Principles of assessment and effective feedback

Introduction

Quality medical education requires commitment and contribution at every level. It translates into the purposeful investment of resources (time, money and energy) in the development of people, as they support the institution’s future (1). The goal is for students to practice medicine as a responsible and accomplished service to others (2). To achieve this goal, universities must guarantee that students acquire knowledge and skills and assimilate the values of the health profession. This is accomplished through supervision, which combines monitoring (assessment) and advice (feedback) for personal and professional development. Several definitions suggest that supervision is motivated by providing the best care in the interest of the patient (3).

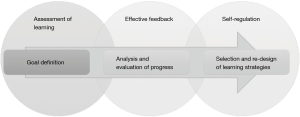

Whether it takes place in a classroom, a community clinic or a hospital, supervision must follow a model. First, the assessment of learning should include the definition of a learning objective, which represents the goal that every learner must achieve. Then, effective feedback cycles should be implemented, consisting of analysis and evaluation of progress. Finally, there should be a stage of self-regulation and continuous improvement that requires the selection and redesign of learning strategies (Figure 1).

In the highly dynamic and complex clinical context in which medical education takes place, there is a constant tension between maintaining the independence of the teaching-learning process and providing effective supervision that ensures patient safety. Several factors or cues influence the supervisor’s decision to trust the learner’s clinical competence. Clinical competence is an integrative concept that describes the skills students must have to approach the patient as a whole. It combines the cognitive process for decision making with procedural skills, communication skills and empathy, the selection of a cost-efficient treatment, the ability to operate within a health system, and proposals to improve the quality of life not only of this patient but also of others with similar conditions. Developing this competence has proved to be a difficult task for medical centers and universities, as the traditional curriculum is oriented to assess learners’ aptitude to diagnose and treat diseases, not to provide integral care for the individual or the population.

Assessment becomes more difficult for the supervisor as involvement in clinical teaching deepens and as the supervisory structures deployed in the hospital environment become more diverse. The typical participants in the teaching-learning process at the point of care are attending physicians (AP), senior residents (SR), junior residents (JR) and medical students (MS) (4). Supervision of these participants takes place in several structures: trainees reporting directly to the AP, senior trainees supervising junior trainees, or peer supervision (Table 1). Several studies suggest that the type of structure depends on the institution’s understanding of a theoretical model for supervision; some are based on adult learning theories, others on experiential learning, work-based learning, or apprenticeship (3). All of these models are intrinsically intertwined with institutional policies, as they require different levels of commitment from staff and faculty.

Table 1

| Structure | Participants |
|---|---|
| Trainees reporting directly to attending physicians | AP -> SR; AP -> JR; AP -> MS |
| Senior trainees supervise junior trainees | AP -> SR; SR -> JR; JR -> MS |
| Peer supervision | SR -> SR; JR -> JR; MS -> MS |

AP, attending physicians; SR, senior residents; JR, junior residents; MS, medical students.

The ability of trainees to perform in the real world is content-specific as well as progressive, and therefore may be gained through deliberate practice (5). For example, the Royal College of Physicians and Surgeons of Canada (RCPSC) defined the competency by design (CBD) model to describe the stages that physicians must pass through during their professional development, starting in the “Transition to Discipline” phase and ending in the “Transition out of Professional Practice” level. In these stages they must complete milestones called entrustable professional activities (EPAs), which are tasks defined by the supervisor for a resident to demonstrate competence (6). The EPAs guide the learner’s daily clinical performance toward the overall objective of the educational program, in which they must perform as competent physicians. The complexity of the EPAs depends on several variables: severity of the symptomatology or epidemiology; urgency of intervention; patient cooperation; quantity and sophistication of infrastructure and availability of resources; profiles and experience of the healthcare team; and uncertainty of evolution (7).

Several proposals for supervision discuss the need for context-specific assessments of different dimensions of clinical competence. A widely accepted proposal in medical education is Miller’s pyramid, which describes the journey from novice to expert through professionally authentic learning experiences (8). This model includes levels of performance in cognition, namely fact gathering (knows) and interpretation or application (knows how), followed by levels of performance in behavior, namely demonstration of learning (shows) and performance integrated into practice (does). Kennedy, Regehr, Baker and Lingard (4) propose a model more tailored to the point of care, which defines the dimensions of performance as knowledge, discernment of limitations, truthfulness, and conscientiousness. Knowledge refers to the relevant knowledge and clinical skills; discernment of limitations describes the awareness of the limits of one’s clinical knowledge and skills; truthfulness is the integrity of character of the trainee and the absence of deception; and conscientiousness is the diligence to follow through on assigned tasks.

This article first reviews the principles and goals of assessment, followed by the moments and types of available assessment that define the standard toward which the learner is advancing. It then reviews the available evidence of performance and the instruments used to assess it, which provide a path for the learner to implement learning strategies. This is followed by the self-regulation stage, in which the efficiency of the methods applied is evaluated. This stage offers learners the opportunity to reflect on their performance and develop self-direction for life-long learning.

Assessment of learning

According to Gallardo (9), assessment is the compilation and presentation of evidence that makes it possible to determine students’ level of learning. This learning is achieved through a deliberate strategy, whereby the teacher paces the delivery of knowledge to achieve the learning objectives established in the curriculum and academic programs. Several authors emphasize that assessment is a continuous process that confirms the degree to which each student has achieved the academic objectives (10). Assessment should also be a systematic measure that shows students’ progress through an objective and fair evaluation, supported by structured instruments that classify learners’ performance into different levels of skill.

There are three goals for assessment: (I) direction and motivation for future learning, which defines formative assessment; (II) classification of competent physicians to guarantee the community the best level of care; and (III) selection of applicants for advanced training. The last two are part of summative assessment (6). There is another type of assessment, the diagnostic, whose goal is to provide an initial glimpse of the learners’ strengths and weaknesses to further orient actions and strategies.

However, every type of assessment must be guided by four principles: reliability (11), accuracy (12), objectivity (8), and authenticity. These principles are described in Table 2.

Table 2

| Principle | Definition |
|---|---|
| Reliable | Assessment measures what it is supposed to measure. It must reflect the competency being assessed as a significant, meaningful and worthwhile accomplishment |
| Accurate | There is a link between instruction and assessment. Students’ learning depends on what they perceive is required |
| Objective | The level of competence is not subjective; it would be the same regardless of the rater who performed the assessment |
| Authentic | Associated with real-life domains |

Goal definition

There are several challenges in assessment. One is the dilemma of choosing the right assessment practice. Assessment implies a reflection on the assessment practice itself. For example: are the goals and objectives of each session aligned with the aims and objectives stated for each subject? Do we have evidence of the development of clinical competence as the student progresses through the years of study? Areas of opportunity still need to be identified, and this depends on the design and implementation of constant monitoring of the students’ performance.

Students’ level of proficiency can be measured in different formats: written tests, multiple-choice cases, objective structured clinical examinations (OSCE), etc. However, one of the most important steps in the assessment process is the definition of standards or goals, which address three important questions: where am I going? how am I going? and where to next? (13). According to the authors, defining appropriate goals and standards results in motivating challenges that focus students’ attention. The answers to the first two questions make explicit the gap between the desired goal and the actual performance.

The definition of a standard should have several parts including: purpose, level of experience, scenario, patient, and technology or resources. These elements are described in Table 3 and examples for an ophthalmology program are given.

Table 3

| Element | Definition | Example |
|---|---|---|
| Purpose | The purpose and objective of the activity is defined based on its character: education, training, performance evaluation, clinical or research. | The purpose of this activity would be to make a diagnosis. Specifically, the learner must identify the cardinal positions of gaze and identify chronic loss of vision |
| Level of experience | It is described in terms of the previous preparation that the participants need to take part in the experience: students, professional career in health sciences, residents, or continuous education. | Novice residents |
| Scenario | Describes the place where the experience takes place: home or office, school or library, simulation laboratory, external clinic, hospital etc. | Outpatient clinic |
| Patient | It refers to the age of the patient being treated: neonates, infants, adolescents, adults or elderly | Chronic patient |
| Technology or resources | It refers to the resources required: anatomical models, computer or virtual simulation, electronic or real patient. Does the experience require labs or images? | Perform physical exploration, visual acuity |

Although these standards are mainly defined by the institutions or the faculty as part of the curriculum and may be referred to as educational needs, they may be identified by an individual learner as “learning needs”.

Evidence and instruments

For each assessment, a decision must be made about whether the outcomes show that the candidate has met the standard. This decision must remain the same regardless of the time of assessment or the candidates to whom the learner is being compared (14). This is of vital importance in tests administered for licensing or certification of medical competencies (15).

Different individuals participate in judging candidate performance. Depending on who is involved, the assessment is defined as self-assessment (performed by the trainee), peer evaluation (performed by another trainee), or hetero-assessment (performed by the supervisor). Traditionally, continuing medical education programs have relied on self-assessment and have been deemed ineffective (16).

Essays, written simulations, bedside examinations, portfolios etc. are evidence of performance. Rubrics, checklists, interviews and observation guides are instruments that provide a path for the learner to implement learning strategies. These are presented in Table 4.

Table 4

| Evidence: What proof do I have of the development of competence? | Instrument: How can we value the quality of the evidence? |
|---|---|
| Essay, direct observation or video recording, portfolio | Rubric, global ratings |
| Patient management problems (PMP) or written simulation, standardized patients and objective structured clinical examination (OSCE) | Checklist |
| Bedside examination, oral examinations, patient assessments | Structured interview and observation guides |

Effective feedback

For some authors, feedback is the information provided by teachers, peers, experience or books regarding one’s understanding or performance (13), a definition that describes its purpose and effects on the teaching-learning process. Other authors imply that feedback is the force that drives learning (5). This reflection on performance provides direction, alternatives, and strategies to arrange the understanding of learning or information that was previously acquired; therefore a context or interpretation must occur. Feedback is provided by teachers, peers, parents etc., whether intentionally or not. It is a two-way street, and its quality is based on a relationship. Therefore, feedback can be accepted, modified or rejected depending on the quality of the relationship the learner has with the supervisor (13).

One initiative being explored by universities and medical schools is the creation of systems called learning communities or mentoring programs. These groups are made up of health professionals at different stages of their careers, as well as undergraduate students, residents and faculty of all medical specialties, with the objective of building knowledge through permanent dialogue. This model is centered on interaction as the basis of the educational experience, in which scenarios are generated for the participants to reflect on their discipline and on the content of non-formal education.

A model to be highlighted is the one adopted by the Ophthalmology residency program at the School of Medicine and Health Sciences of Tecnológico de Monterrey. This program is focused on training ophthalmology specialists by providing patient-centered care. It involves participants at three levels of experience: novice resident, senior resident, and mentor. The involvement of these actors entails different roles, levels of responsibility and learning objectives, but all gain knowledge in broad domains of competence. This model is presented in Table 5, which explains each participant’s learning objective and responsibility to the patient and to the training model.

Table 5

| Participant | Learning objective | Responsibility to the patient | Responsibility to the training model |
|---|---|---|---|
| Novice resident | Makes a diagnosis and proposes a course of treatment | Obtaining information by conducting exploration and interrogation | Asks all the questions needed to improve conceptual learning about diagnosis, treatment, and patient interaction |
| Senior resident | Differential diagnosis and definition of an integral care based on the context of the patient | Through clinical judgment, filters the information obtained by the novice resident in order to make an orderly presentation and integration with the medical history that describes who the patient is | Provides feedback to the novice resident about the signs and symptoms that might have gone unnoticed |
| Mentor (attending physician) | Development of high-impact human resources | Ultimately responsible for the patient’s health | Provides peer-to-peer feedback to the senior resident about alternative treatments or aspects of care |

Analysis and evaluation of progress

Effective feedback is a review of performance in which the participants analyze their actions in order to improve or maintain future performance. Very often, however, instructors face the predicament of having to express judgments about performance to students or colleagues, and they hold back those thoughts to avoid confrontation or negative emotions (17). According to Rudolph, Foldy, Robinson, Kendall, Taylor and Simon (18), the classic instructor’s dilemma consists of critiquing performance at the risk of jeopardizing the relationship or trust with learners, who might become defensive or ashamed.

Some elements or values are necessary to deliver effective feedback. Based on the models of Brookhart (19), Brukner, Altkorn, Cook, Quinn and Nabb (20), and Maestre and Rudolph (17), six principles are proposed in Table 6.

Table 6

| Principles | Brookhart (19) | Brukner, Altkorn, Cook, Quinn and Nabb (20) | Maestre and Rudolph (17) |
|---|---|---|---|
| Prompt | Timely | | |
| Contextualized | Positive, clear and specific | Review of goals and expectations | Orient environment |
| Fosters critical judgment | Self-referenced | Student self-assessment prior to supervisor’s feedback | |
| Objective | Focuses on student’s work or processes, not on the student | Focus on behavior rather than on personality | Personal comfort |
| Based on facts | Descriptive and not judgmental | Specific examples to illustrate observations | Generate trust |
| Propositional | Focuses on strengths and suggestions for next steps | Suggest specific strategies to improve performance | |
| Others | Interim feedback | | |

The gold standard for providing feedback in the clinical context is debriefing. Debriefing is a conversation in which different people analyze an event to reflect on the actions, roles, emotions and abilities they brought into play. This allows the teacher or supervisor to assess the performance of each student and offer specific recommendations from an integral perspective. There are several approaches that the supervisor or instructor might take to feedback: judgmental, nonjudgmental and good judgment. The judgmental approach implies that the instructor knows the truth and that the learner is wrong and needs to be taught a lesson. The nonjudgmental approach is similar, but looks for the friendliest way to tell the learner how to perform correctly. The good judgment approach creates a context based on the learning objectives and explores the internal meanings and assumptions of the learner in order to inquire how to improve.

A basic principle has been proposed by Harvard’s Center for Medical Simulation: all learners are smart, competent, and are concerned with performing a good job.

Self-regulation

Learning can be described as the development of a way of thinking and acting that is characteristic of an expert community. This way of thinking consists of two important elements: the knowledge that represents the phenomenon, and the thinking abilities that construct, modify and use that knowledge to interpret situations and act accordingly. In the context of andragogy theory, adults make decisions about what they want to learn as well as the method to achieve it (21).

Olivares and López (22) conceive self-regulation as the skill to regulate one’s own strategies and goals to reach an objective. Self-directed learning is defined by Guglielmino (23) in terms of context, activation and universality. Context describes that learning can occur in various situations, ranging from the classroom to work or personal interest; these vary from tasks to learning projects developed in response to personal interests or individual or collective needs. Activation considers that certain personal characteristics, such as attitudes, values and abilities, determine the level of enthusiasm and responsibility for learning objectives, activities or resources. Universality specifies that self-directed learning exists along a continuum and is present in every human being.

Learning strategies and methods can be applied with several degrees of self-regulation, ranging from those strongly regulated by the teacher to those in which there is minimal regulation, and including those in which regulation is shared by learner and teacher (24). Several authors categorize these strategies as cognitive, affective and metacognitive (25,26). These categories are described in Table 7.

Table 7

| Learning strategy | Definition | Example |
|---|---|---|
| Cognitive strategies | Action and effect of knowing, collecting, organizing and analyzing | Oriented to knowledge |
| Affective (emotional) strategies | Regulate the feelings that arise in learning and lead to an emotional state that affects learning, either positively, negatively or neutrally | Motivation, concentration, self-reflection, generation of emotions and expectations |
| Metacognitive strategies | Regulate the cognitive and affective ones | Monitor that learning goes according to plan, to diagnose the cause of the difficulties and to adjust the learning process when necessary |

In heavily controlled environments, with no place for self-direction, students wait for the teacher to provide directions that they can follow, all advancing on the learning task at the same pace (29). This teaching paradigm is based on the delivery of a program in which the resident must complete a set number of courses and a set amount of time in order to advance in his or her training. The focus is on the quality of the education the teacher is providing, not on the quality of the student’s learning. Further, the curriculum is approached from isolated disciplines or independent departments, without interdisciplinary or interdepartmental collaboration (30). Reflecting on this paradigm opens the possibility of no longer depending on the direction of an expert, so that learning becomes increasingly self-directed. In addition to the orientation and vision that students have of their educational experience, an important factor is the teacher’s view of the role that he or she should take. The new roles that must be assumed are motivator, role model, activator, monitor and assessor of student learning activities, where the most important point is that control over this process must be shared to a greater extent (30).

Motivation is lost if information is overly simplified; students must be able to work on their own and take the initiative to learn (28). They achieve better results when they find some freedom in that discovery, and that exploration fosters the analysis skills that finally allow them to propose new ideas. Preparing their own learning strategies helps them acquire not only disciplinary knowledge but also habits for life-long learning, as they learn time-management strategies and metacognition.

Although the relevance of students’ ability to manage their own learning is not recent, and first appeared with Knowles’s proposal and the concept of adult education (22), it redefines the competences individuals need in order to face the challenges of the postmodern world.

Salter (29) states that learners should take time to reflect on their learning to determine the next lines of action, providing long-term benefits as they reflect on what is needed and how it can be achieved (31). Since knowledge in medicine is constantly changing and advancing, it is vital to evaluate the disposition and habits of mind of students as self-directed learners (32).

Conclusions

Several general principles for assessment and providing feedback were discussed. The impact of these strategies can be reflected directly in different outcomes: motivation and self-directed learning, efficiency in the implementation of learning strategies, or integration into a work structure in the clinical setting. However, a key aspect of the assessment and feedback process is the quality of the relationship between supervisor and learner. This is determined by the measure of respect, empathy and trust that the relationship encompasses. If the AP, faculty or senior staff responsible for the supervision process in clinical settings overcome the conception that learners cannot endure feedback, or that making mistakes makes incompetent physicians, they will move the teaching and learning process of medical specialists forward. Every action taken by learners comes from a rationale or frame of thought, and part of the responsibility of faculty is to recognize that performance includes minor setbacks that are part of the learning curve.

Given the importance of assessment processes, where a greater depth of thought must be achieved, a multiple-perspective analysis that integrates the vision of different supervisors, instruments and moments of assessment must take place to make this process fairer and more honest for all kinds of learners.

Acknowledgments

We would like to thank Silvia Lizett Olivares Olivares for her invaluable support to strengthen medical education in Mexico by proposing innovative educational models that are transforming medicine into a more human and patient-centered space for learning.

Funding: None.

Footnote

Provenance and Peer Review: This article was commissioned by the Guest Editors (Karl C. Golnik, Dan Liang and Danying Zheng) for the series “Medical Education for Ophthalmology Training” published in Annals of Eye Science. The article has undergone external peer review.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/aes.2017.06.10). The series “Medical Education for Ophthalmology Training” was commissioned by the editorial office without any funding or sponsorship. The authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Sallis E. Total Quality Management in Education. New York: Routledge; 2002.
2. Cooke M, Irby DM, Sullivan W, et al. American medical education 100 years after the Flexner report. N Engl J Med 2006;355:1339-44.
3. Kilminster SM, Jolly BC. Effective supervision in clinical practice settings: a literature review. Med Educ 2000;34:827-40.
4. Kennedy TJ, Regehr G, Baker GR, et al. Point-of-Care Assessment of Medical Trainee Competence for Independent Clinical Work. Acad Med 2008;83:S89-92.
5. Epstein RM. Assessment in medical education. N Engl J Med 2007;356:387-96.
6. Stodel EJ, Wyand A, Crooks S, et al. Designing and Implementing a Competency-Based Training Program for Anesthesiology Residents at the University of Ottawa. Anesthesiol Res Pract 2015;2015:713038.
7. Olivares SL, Valdez JE. Aprendizaje Centrado en las Perspectivas del Paciente. México: Editorial Médica Panamericana; 2017.
8. Miller GE. The Assessment of Clinical Skills/Competence/Performance. Acad Med 1990;65:S63-7.
9. Gallardo KE. Evaluación del aprendizaje - Retos y mejores prácticas. Monterrey: Editorial Digital del Tecnológico de Monterrey; 2013.
10. Lavalle-Montalvo C, Leyva-González FA. Instrumentación pedagógica en educación médica. Cir Cir 2011;79:2-9.
11. Gulikers JT, Bastiaens TJ, Kirschner PA. A Five-Dimensional Framework for Authentic Assessment. Educ Technol Res Dev 2004;52:67.
12. Van der Vleuten CP. The assessment of professional competence: developments, research and practical implications. Adv Health Sci Educ Theory Pract 1996;41-67.
13. Hattie J, Timperley H. The Power of Feedback. Rev Educ Res 2007;77:81-112.
14. Norcini JJ. Setting standards on educational tests. Med Educ 2003;37:464-9.
15. Norcini JJ, Shea JA. The Credibility and Comparability of Standards. Applied Measurement in Education 1997;10:39-59.
16. Norman GR, Shannon SI, Marrin ML. The need for needs assessment in continuing medical education. BMJ 2004;328:999-1001.
17. Maestre JM, Rudolph JW. Theories and styles of debriefing: the good judgment method as a tool for formative assessment in healthcare. Rev Esp Cardiol (Engl Ed) 2015;68:282-5.
18. Rudolph JW, Foldy EG, Robinson T, et al. Helping without harming: the instructor's feedback dilemma in debriefing--a case study. Simul Healthc 2013;8:304-16.
19. Brookhart SM. Educational Assessment Knowledge and Skills for Teachers. Educational Measurement: Issues and Practice 2011;30:3-12.
20. Brukner H. Giving effective feedback to medical students: a workshop for faculty and house staff. Med Teach 1999;21:161-5.
21. Knowles MS, Holton III EF, Swanson RA, et al. Andragogía: El aprendizaje de los adultos. México: Oxford University Press; 2001.
22. Olivares Olivares SL, López Cabrera MV. Medición de la autopercepción de la autodirección en estudiantes de medicina de pregrado. Inv Ed Med 2015;4:75-80.
23. Guglielmino LM. Why self-directed learning? International Journal of Self Directed Learning 2008;5:1-14.
24. Van Eekelen IM, Boshuizen HP, Vermunt JD. Self-regulation in higher education teacher learning. Higher Education 2005;50:447-71.
25. Maturano CI, Solveres MA, Macías A. Estrategias cognitivas en la comprensión de un texto de ciencias. Investigación Didáctica 2002;20:415-25.
26. Vermunt JD. The regulation of constructive learning processes. British Journal of Educational Psychology 1998;68:149-71.
27. Vermunt JD. Metacognitive, Cognitive and Affective Aspects of Learning Styles and Strategies: A Phenomenographic Analysis. Higher Education 1996;31:25-50.
28. Javornik M, Ivanus M. Teacher’s conceptions of self-regulated learning a comparative study by level of professional development. Odgojne znanosti 2010;12:399-412.
29. Salter D. Cases on Quality Teaching Practices in Higher Education. Pennsylvania: IGI Global; 2013.
30. Rodríguez A. El éxito en la enseñanza. México: Editorial Trillas; 2005.
31. Shokar GS, Shokar MK, Romero CM, et al. Self-directed learning: looking at outcomes with medical students. Fam Med 2002;34:197-200.
32. Wagenaar R, González J. Tuning Educational Structures in Europe: Informe Final Fase Uno. Bilbao, Universidad de Deusto Wellcome Trust; 2003.

Cite this article as: Valdez-García JE, López Cabrera MV, Ríos Barrientos E. Principles of assessment and effective feedback. Ann Eye Sci 2017;2:42.