Clinical evaluation exercises and direct observation of surgical skills in ophthalmology

Introduction: workplace-based assessments (WPBAs)

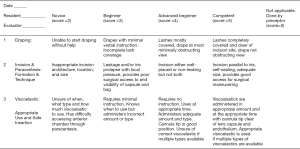

The expression “teaching does not equal learning” captures why competence must be assessed: one cannot assume that because something has been taught, it has also been learned. Valid and reliable methods of assessment are therefore required to ensure that an ophthalmologist can competently evaluate and treat patients. WPBAs are crucial tools for authentically evaluating an ophthalmologist’s performance in patient care and surgery, because they provide a structure for assessing skills observed in the clinic and the operating room.

Most WPBAs are rubrics that contain “dimensions” (the skills required to perform a task), “ratings” (a method of grading the performance) and “descriptors” (an explanation of what it means to achieve a given rating) (Table 1). The dimensions could be the steps of a surgical procedure or the components of an eye examination. The rating system could be numerical (e.g., 1–4) or a scale such as novice, beginner, advanced beginner and competent. The descriptors are very important from both a teaching and a reliability standpoint. Each descriptor should precisely describe the behavior expected for a given rating, and ideally every rating for every dimension has one. The descriptors tell both the student and the assessor what is required to achieve a certain rating, so the student can review them and learn what is expected. Examples of dimensions, ratings and behavioral descriptors are shown in Figure 1, a portion of the ICO-OSCAR:phaco (1). Because the descriptors standardize the assessment, they help assessors rate similarly, which produces a more reliable tool.

Table 1 Generic structure of a workplace-based assessment rubric

| Dimension | Rating: Bad | Rating: Better | Rating: Good | Rating: Excellent |
|---|---|---|---|---|
| Step 1 of procedure | Behavior description in every rating box | Behavior description in every rating box | Behavior description in every rating box | Behavior description in every rating box |
| Step 2 of procedure | – | – | – | – |
| Step 3, etc. | – | – | – | – |

In this review we will consider existing WPBAs designed to assess clinical skills and surgical proficiency. Another article in this issue will describe the multisource feedback (360-degree assessment) WPBA designed to assess communication skills and professionalism.

A literature search of Google and PubMed was conducted using the keywords workplace-based assessment, surgical rubric, clinical skills assessment, OCEX, OSCAR, OCAT and ophthalmic surgical skill assessment.

Clinical evaluation exercises

A variety of clinical evaluation exercises exist for general medical care; this review describes ophthalmology-specific WPBAs. To my knowledge, the Ophthalmic Clinical Evaluation Exercise (OCEX) is the only ophthalmology-specific WPBA whose validity and reliability have been determined and published (2,3). With the OCEX, an assessor observes the resident performing an entire history, examination and case presentation. Importantly, a rubric was developed that describes the behavior necessary to achieve each grade on the OCEX. As the assessor observes, they simply circle the behavior in the rubric that the trainee demonstrates. When the form is completed, the assessor reviews the rubric with the trainee as specific formative feedback for improvement. The OCEX has been shown to be a valid and reliable tool for assessing skill in patient care, medical knowledge and communication. It is available in multiple languages on the International Council of Ophthalmology’s (ICO) website (www.icoph.org). However, because the OCEX was not developed by an international panel, the ICO has created and validated an international OCEX, which has been submitted for publication.

Paley and associates investigated whether the OCEX could discriminate skill levels across years of training and found that it did not help monitor the longitudinal development of resident clinical performance (4). This is not surprising, as the OCEX was designed to evaluate whether a resident can take a history, conduct an examination and provide a management plan, skills that should be acquired by the end of the first year (or sooner). The value of the OCEX lies in assuring that the beginning resident can perform these skills and in providing the formative feedback needed to achieve this goal. Langue and associates created and validated the “Pediatric Examination Assessment Rubric” (PEAR) to assess specifically the resident’s skill in examining pediatric patients (5). The PEAR is similar to the OCEX but is more detailed and focuses on the testing required for pediatric patients. Reliability studies have yet to be completed.

The On-Call Consultation Assessment Tool (OCAT) is a validated type of WPBA that is applied retrospectively during a chart review (6). It addresses several aspects of on-call performance: (I) quality of patient care, (II) consultation timeliness, and (III) the resident’s perception of problem urgency. The OCAT is completed periodically by reviewing on-call consultation notes. The OCAT is then reviewed with the student to provide specific formative feedback. Subsequent OCATs are monitored for improvement.

The UK Royal College of Ophthalmologists (RCOphth) has developed a variety of WPBAs (7). None of these WPBAs has been published in the peer-reviewed literature, and it is unclear what validation process was used. The Clinical Rating Scales (CRS) and the Direct Observation of Procedural Skills (DOPS) are both used to assess clinical skills. There are 11 CRS forms, which assess each part of the 8-step eye examination plus retinoscopy, Amsler grid testing and history taking. The CRS are rubrics with a grading scale of poor, fair, good and very good. They describe the behaviors that constitute “poor” and “very good” but give no descriptions for “fair” and “good,” which leaves considerable room for interpretation by the assessor. The DOPS are used for a wide variety of skills, such as ocular irrigation, lacrimal function and local anesthesia. There is a single DOPS form, on which the type of procedure is written at the top. Its rating scale is the same as that of the CRS (poor to very good), but there are no behavioral anchors describing what it means to achieve each rating.

Direct observation of surgical skills

Trainees are often evaluated by an assessor who observes a surgical procedure and provides feedback. Well-written rubrics are an essential component of providing high-quality feedback.

Cremers and associates developed the “Global Rating Assessment of Skills in Intraocular Surgery” (GRASIS) and showed its face and content validity (8). The GRASIS assigns scores from 1 to 5 for preoperative knowledge, microscope use, instrument handling and tissue treatment, in addition to seven other areas. Behavioral descriptors were written for ratings of 1, 3 and 5, leaving ratings of 2 and 4 to the evaluator’s judgment. Cremers and associates also developed the “Objective Assessment of Skills in Intraocular Surgery” (OASIS) (9). This tool facilitates the collation of objective data such as wound placement and size, phacoemulsification time and total surgical time, and is meant to complement the GRASIS. They showed that the OASIS also had face and content validity.

Feldman and Geist developed the Subjective Phacoemulsification Skills Assessment, which is designed specifically for phacoemulsification cataract extraction (PCE) surgery (10). This tool rates each individual step of PCE as well as overall performance. The evaluator scores each item on a 1–5 scale ranging from “strongly agree” to “strongly disagree.” The authors demonstrated a degree of inter-rater reliability.

The UK RCOphth WPBA Handbook also describes several Objective Structured Assessments of Surgical Skill (OSATS) (7). OSATS 2 and 3 are specifically for the use of the operating microscope and for aseptic technique. OSATS 1 is generic for all surgical procedures; thus, it contains no procedure-specific behavioral descriptors to standardize the assessment or to teach the student.

Saleh and associates developed the “Objective Structured Assessment of Cataract Surgical Skill” (OSACSS) (11). Phacoemulsification surgery is divided into 20 separate steps, and each step is scored on a 5-point Likert scale. A rudimentary and highly subjective rubric assigns descriptions to 3 of the 5 Likert ratings: 1 = “poorly or inadequately performed”, 3 = “performed with some errors or hesitation” and 5 = “performed well with no prompting or hesitation.” Scores of 2 and 4 are left to the evaluator to determine, which introduces subjectivity and lowers the reliability of the tool. Recognizing this, an international panel of authors modified the OSACSS by producing a globally applicable rubric, the ICO Ophthalmology Surgical Competency Assessment Rubric for phacoemulsification (ICO-OSCAR:phaco), with levels based on a modified Dreyfus model of skill acquisition (novice, beginner, advanced beginner and competent). No expert level was included because trainees do not reach this level during their training. Behavioral anchors, that is, descriptions of the behavior representing each scoring level of each step, were written to decrease subjectivity and to show trainees what is expected (Figure 1). Validity and inter-rater reliability were established by international panels of experts (1,12). To my knowledge, the ICO-OSCARs are the only WPBAs developed and validated for international use.

In a similar fashion, internationally applicable assessment tools were developed and validated for many commonly performed ophthalmic surgeries (13-21). ICO-OSCARs are being developed for intravitreal injection, corneal transplantation, selective laser trabeculoplasty, dacryocystorhinostomy, Descemet membrane endothelial keratoplasty (DMEK), deep anterior lamellar keratoplasty (DALK), Descemet stripping automated endothelial keratoplasty (DSAEK), pterygium surgery, glaucoma tube shunt surgery, ruptured globe repair and pediatric glaucoma procedures. Together, the ICO-OSCARs serve as a toolbox of internationally applicable, valid and reliable assessment tools for surgical skill that clearly communicate to the learner what is expected to attain competence.

Summary

It is essential to have methods that demonstrate that our trainees can competently perform the skills required of an ophthalmologist in the real world. WPBAs must be used in addition to traditional tests of medical knowledge such as multiple-choice questions, oral examinations and objective structured clinical examinations. An increasing number of validated WPBAs are now available for assessing clinical and surgical skill.

Acknowledgments

Funding: None.

Footnote

Provenance and Peer Review: This article was commissioned by the Guest Editors (Ana Gabriela Palis and Jorge E. Valdez-García) for the series “Modern Teaching Techniques in Ophthalmology” published in Annals of Eye Science. The article has undergone external peer review.

Conflicts of Interest: The author has completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/aes.2019.07.03). The series “Modern Teaching Techniques in Ophthalmology” was commissioned by the editorial office without any funding or sponsorship. KCG serves as an Associate Editor-in-Chief of Annals of Eye Science. The author has no other conflicts of interest to declare.

Ethical Statement: The author is accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Golnik KC, Beaver H, Gauba V, et al. Cataract surgical skill assessment. Ophthalmology 2011;118:427.e1-5. [PubMed]

2. Golnik KC, Goldenhar LM, Gittinger JW Jr, et al. The Ophthalmic Clinical Evaluation Exercise (OCEX). Ophthalmology 2004;111:1271-4. [Crossref] [PubMed]

3. Golnik KC, Goldenhar L. The ophthalmic clinical evaluation exercise: reliability determination. Ophthalmology 2005;112:1649-54. [Crossref] [PubMed]

4. Paley GL, Shute TS, Davis GK, et al. Assessing Progression of Resident Proficiency during Ophthalmology Residency Training: Utility of Serial Clinical Skill Evaluations. J Med Educ Train 2017;1.

5. Langue M, Scott IU, Soni A. The Pediatric Examination Assessment Rubric (PEAR): A Pilot Project. J Acad Ophthalmol 2018;10:e127-32. [Crossref]

6. Golnik KC, Lee AG, Carter K. Assessment of ophthalmology resident on-call performance. Ophthalmology 2005;112:1242-6. [Crossref] [PubMed]

7. The Royal College of Ophthalmologists. Ophthalmology Specialty Training Workplace Based Assessment Handbook for OST. Available online: https://www.rcophth.ac.uk/wp-content/uploads/2014/11/WpBA-Handbook-V4-2014.pdf. Accessed June 29, 2019.

8. Cremers SL, Lora AN, Ferrufino-Ponce ZK. Global Rating Assessment of Skills in Intraocular Surgery (GRASIS). Ophthalmology 2005;112:1655-60. [Crossref] [PubMed]

9. Cremers SL, Ciolino JB, Ferrufino-Ponce ZK, et al. Objective Assessment of Skills in Intraocular Surgery (OASIS). Ophthalmology 2005;112:1236-41. [Crossref] [PubMed]

10. Feldman BH, Geist CE. Assessing residents in phacoemulsification. Ophthalmology 2007;114:1586. [Crossref] [PubMed]

11. Saleh GM, Gauba V, Mitra A, et al. Objective structured assessment of cataract surgical skill. Arch Ophthalmol 2007;125:363-6. [Crossref] [PubMed]

12. Golnik KC, Beaver H, Gauba V, et al. Development of a new valid, reliable, and internationally applicable assessment tool of residents' competence in ophthalmic surgery (an American Ophthalmological Society thesis). Trans Am Ophthalmol Soc 2013;111:24-33.

13. Golnik KC, Haripriya A, Beaver H, et al. Cataract surgery skill assessment. Ophthalmology 2011;118:2094-2094.e2. [Crossref] [PubMed]

14. Golnik KC, Gauba V, Saleh GM, et al. The ophthalmology surgical competency assessment rubric for lateral tarsal strip surgery. Ophthalmic Plast Reconstr Surg 2012;28:350-4. [Crossref] [PubMed]

15. Golnik KC, Motley WW, Atilla H, et al. The ophthalmology surgical competency assessment rubric for strabismus surgery. J AAPOS 2012;16:318-21. [Crossref] [PubMed]

16. Motley WW 3rd, Golnik KC, Anteby I, et al. Validity of ophthalmology surgical competency assessment rubric for strabismus surgery in resident training. J AAPOS 2016;20:184-5. [Crossref] [PubMed]

17. Swaminathan M, Ramasubramanian S, Pilling R, et al. ICO-OSCAR for pediatric cataract surgical skill assessment. J AAPOS 2016;20:364-5. [Crossref] [PubMed]

18. Golnik KC, Law JC, Ramasamy K, et al. The Ophthalmology Surgical Competency Assessment Rubric for Vitrectomy. Retina 2017;37:1797-804. [Crossref] [PubMed]

19. Green CM, Salim S, Edward DP, et al. The Ophthalmology Surgical Competency Assessment Rubric for Trabeculectomy. J Glaucoma 2017;26:805-9. [Crossref] [PubMed]

20. Juniat V, Golnik KC, Bernardini FP, et al. The Ophthalmology Surgical Competency Assessment Rubric (OSCAR) for anterior approach ptosis surgery. Orbit 2018;37:401-4. [Crossref] [PubMed]

21. Law JC, Golnik KC, Cherney EF, et al. The Ophthalmology Surgical Competency Assessment Rubric for Panretinal Photocoagulation. Ophthalmol Retina 2018;2:162-7. [Crossref] [PubMed]

Cite this article as: Golnik KC. Clinical evaluation exercises and direct observation of surgical skills in ophthalmology. Ann Eye Sci 2019;4:26.