Psychophysics in the ophthalmological practice—I. visual acuity

Introduction

Visual acuity (VA) refers to the capacity to recognize the spatial characteristics of a stimulus. The Consilium Ophthalmologicum Universale defines VA as the visual system’s ability to resolve spatial details. Which details depend on the type of symbol (called optotype) used for measuring this function: for example, in the Landolt’s charts the detail to be resolved is the gap along the contour of a ring.

The formal definition of VA relies on the concept of angular size, that is the angle subtended by an object at a given viewing distance. For example, the visual angle of the width of the thumb held at arm’s length is about 2 degrees.
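The notion of angular size can be made concrete with a short sketch. The function name and the thumb dimensions below (about 2 cm wide, held at about 57 cm) are illustrative assumptions, not values taken from the text.

```python
import math

def visual_angle_deg(size, distance):
    """Angle (in degrees) subtended by an object of a given size at a given distance.

    Both arguments must be expressed in the same unit (e.g., centimeters).
    """
    return math.degrees(2 * math.atan(size / (2 * distance)))

# Assumed values: a thumb about 2 cm wide held at about 57 cm from the eye.
thumb_angle = visual_angle_deg(2.0, 57.0)
print(round(thumb_angle, 2))  # close to the 2 degrees quoted in the text
```

For small angles the result is nearly proportional to size/distance, which is why halving the viewing distance roughly doubles the angular size.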

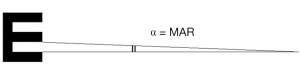

VA corresponds to the inverse of the minimum angular size of the detail within the just-resolvable configuration expressed as min arc [minimum angle of resolution (MAR)]: the lower the MAR, the higher VA. Since a logarithmic scale is more appropriate as it makes the decrement in size of the optotypes uniform across the range of measurement, VA is generally measured as the logarithm of the MAR (logMAR).

VA recruits a resolution or a more complex recognition task. A resolution task aims to detect the details of a configuration, i.e., the details that characterize a symbol like a “C” or an “E”: detecting the details of a configuration, in fact, means resolving the configuration into its high-frequency components. The type of threshold measured by a resolution task is a detection threshold. It is worth recalling that the detection threshold measures the ability to detect the transition from a state of no stimulation to a state of stimulation. This takes place, for example, in perimetry (the procedure aimed at assessing the integrity of the visual field), when the observer is asked to report the sudden onset of a spot of light presented on a background (1). Incidentally, a second type of threshold is the discrimination threshold. The discrimination threshold measures the ability to differentiate one state of stimulation from another state of stimulation (1). The discrimination threshold is therefore the just noticeable difference between two different states of stimulation. For example, given as the independent variable the width of the gap of a Landolt “C”, the discrimination threshold is the just noticeable difference between “Cs” with slightly different gap widths (the other characteristics of the stimulus are kept constant). In sum, in a discrimination task, a comparison is made between different states of stimulation whereas in a resolution task, this is not the case. Signal detection theory is a valid approach to these issues. According to signal detection theory (SDT: Green & Swets, 1966), discrimination (and detection) thresholds result from the capacity of the visual system to extract the signal from noise (2).
In other terms, detection and discrimination thresholds depend on the sensory evidence of a signal (the stimulus), which is given by the signal and by a certain amount of noise intrinsically intermingled with it, and on a subjective criterion (SC), unconsciously set by the observer. The subjective criterion can be more “liberal”, when the observer is more inclined to answer “yes, I see it” or “yes, I discriminate it” for signals producing a relatively low degree of sensory evidence, or more “conservative”, when he or she is more inclined to answer “no, I do not see it” or “no, I don’t discriminate it” for signals producing a relatively high degree of sensory evidence. The importance of this approach is that, in computing a threshold (be it detection or recognition), SDT assumes that the strength of sensory evidence for a signal as well as the subjective criterion of the observer may change from trial to trial and across individuals.
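The separation between sensory evidence and subjective criterion can be illustrated with the indices commonly used in SDT. The sketch below assumes the standard equal-variance Gaussian model, which the text does not spell out; the function name and the hit and false-alarm rates are hypothetical values chosen only for illustration.

```python
from statistics import NormalDist

def sdt_indices(hit_rate, false_alarm_rate):
    """Sensitivity (d') and criterion (c) under an equal-variance Gaussian model.

    Rates must lie strictly between 0 and 1, otherwise the inverse
    normal transform is undefined.
    """
    z = NormalDist().inv_cdf
    d_prime = z(hit_rate) - z(false_alarm_rate)
    criterion = -0.5 * (z(hit_rate) + z(false_alarm_rate))
    return d_prime, criterion

# Two hypothetical observers with the same sensitivity but different criteria.
liberal = sdt_indices(0.90, 0.40)       # says "yes" easily: negative criterion
conservative = sdt_indices(0.60, 0.10)  # says "yes" reluctantly: positive criterion
print(liberal, conservative)
```

In this example both observers extract the signal from noise equally well, yet their raw proportions of "yes" responses differ because of the criterion alone.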

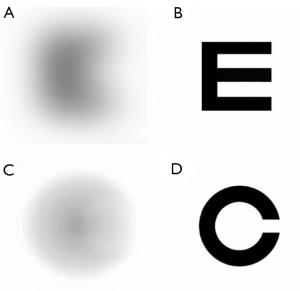

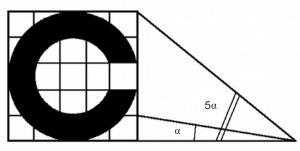

Based on the definition of detection threshold of Aleci (1), the resolution task would measure a detection threshold, as it relies on the ability to notice (detect) the difference between a state of non-stimulation (absence of details like a gap along a ring or of three stacked bars) and a state of stimulation (presence of details like a gap along a ring or three stacked bars). Evidently, detecting the details of a configuration means resolving the characteristics of the signal (Figure 1).

To better clarify this statement, we suggest that visual acuity relies on a two-level process of detection of spatial frequencies. Given a serial pattern (e.g., a grating of alternating black and white bars), spatial frequency corresponds to the number of repetitions per space unit (cycles/degree, where 1 cycle is made up of a black bar plus a white bar). More generally, spatial frequency is the number of cycles/deg of a grating that can be imagined to resolve any structured object. For example, a confusing letter, perceived as a blurry spot, has a spatial frequency of a grating whose width of the bars is equal to the rough width of the spot. In a sharp letter, high-frequency components have the spatial frequency of a grating with the width of the bars equal to the width of the stroke of the letter.
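The correspondence between bar width and spatial frequency can be sketched as follows. The function names are illustrative; the 1/5 stroke-to-height ratio is the one given later in the text for the tumbling E and the Landolt C.

```python
def grating_frequency_cpd(bar_width_arcmin):
    """Spatial frequency (cycles/degree) of a grating with the given bar width.

    One cycle is a dark bar plus a light bar, so the period is twice the bar width.
    """
    period_arcmin = 2 * bar_width_arcmin
    return 60 / period_arcmin

def stroke_frequency_cpd(symbol_height_arcmin, strokes_per_height=5):
    """Frequency of the grating whose bars are as wide as the stroke of the symbol."""
    stroke_arcmin = symbol_height_arcmin / strokes_per_height
    return grating_frequency_cpd(stroke_arcmin)

# A symbol 5 min arc tall: blurred into a spot vs. resolved into its strokes.
blurry_spot = grating_frequency_cpd(5)   # bars as wide as the whole spot
sharp_detail = stroke_frequency_cpd(5)   # bars as wide as the 1 min arc stroke
print(blurry_spot, sharp_detail)
```

Under these assumptions a detail of 1 min arc corresponds to a grating of 30 cycles/degree, while the same symbol seen as a blurry spot corresponds to 6 cycles/degree.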

When a ring with a gap is presented, the first step for its resolution is noticing the transition from a state of non-stimulation (no stimulus) to a state of stimulation (a blurry spot). This means the lowest frequency component of the stimulus is detected. The second step is noticing the transition, within the configuration, from a state of no-stimulation (no details in the blurry spot) to a state of stimulation (the spot is a ring with a gap). This means the high frequencies of the stimulus are detected.

In sum, a detection task evaluates the detection threshold of a global configuration (the lowest spatial frequency of the stimulus) and a resolution task measures the detection threshold of the high spatial frequency components of a configuration, which are its details. Resolution is therefore expressed as the minimum distance of the details of the signal that allows their identification (minimum separable or resolving power).

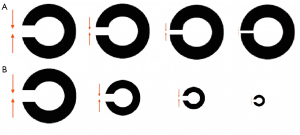

For example, consider a high contrast annular stimulus of diameter d and thickness t. The observer is required to determine if there is a break a in the ring. The variable under examination is, therefore, the angular amplitude a. The target stimuli correspond to rings in which a changes by a predetermined step size; non-interrupted rings (null stimuli) are added to check for false-positive responses.

The task is reporting whenever the gap of the ring, namely the variable a that characterizes the signal (its high-frequency component), is detected: this way, the threshold ae below which a is no longer detected is obtained. If the subject does not detect a, he/she cannot resolve the signal.

In clinical practice, except for illiterate patients or pre-school children, visual acuity is generally measured through a recognition task. Unlike the simpler resolution task, recognition implies the identification of signs with semantic content, like letters or digits. Resolution is necessary but not sufficient for the recognition task, in other terms for identifying a letter or a digit as that letter or that digit. The recognition of the sign “V” (two upward diverging strokes) as the letter “V” requires not only resolving its elementary constituents (resolution task) but also matching the sign with the corresponding letter stored in the semantic memory. A recognition task relies therefore on additional cognitive functions that are not necessary to the resolution task.

Within the research setting, visual acuity can be investigated with non-adaptive or adaptive procedures. The method of constant stimuli (n sets of stimuli presented in a randomized order) belongs to the first class. In each set, the size of the stimuli is constant and the only difference lies in their features, like, for example, the width (and position) of the gap in “C” stimuli. The method of constant stimuli is the most reliable way to compute the psychometric function. The psychometric function is a sigmoid curve generated by the psychophysical response to a stimulation. This function expresses the relationship between the probability to perceive a stimulus and its intensity. As the intensity increases, the subject is more likely to notice the stimulus and the threshold is computed from the level that corresponds to a probability equal to or higher than 50% (target probability). In the visual domain the function is called frequency-of-seeing curve (FOSC). Furthermore, the method of constant stimuli is the most straightforward way to provide information about the dispersion of the data around the threshold and to reduce non-stationary responses (trials, in fact, are randomized). Yet, since it requires a high number of trials, it is time-consuming, thereby unsuitable for clinical purposes. A faster alternative is the method of limits, where series of stimuli with decreasing, then increasing intensity are administered, and the threshold is computed as the intensity at which a reversal in the trend of the responses (from correct to incorrect and vice versa) is observed. Arguably, the drawback of the non-adaptive procedures is low efficiency due to the high number of trials. 
Adaptive nonparametric procedures like the staircase methods or the modified binary search (MOBS), and, even more, adaptive parametric strategies like Best PEST, QUEST, or ZEST (where the next stimulus intensity is determined from all of the observer’s previous responses and the parameters, i.e., shape and slope of the psychometric function, are assumed to be known) overcome this problem. Parametric adaptive strategies tend to converge faster onto the threshold from the very beginning of the examination, greatly reducing the number of trials. Even if this leads to high efficiency, patients, who are generally inexperienced observers, may have difficulty familiarizing themselves with the procedure [see (1) for a review].
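As an illustration of an adaptive nonparametric procedure, the sketch below runs a simple 1-up/1-down staircase. It is a minimal example, not the implementation of any specific clinical test: the observer is idealized and noise-free (an assumption made only to keep the run deterministic), and the function name, starting level, and step size are hypothetical.

```python
def run_staircase(true_threshold, start, step, n_reversals=6):
    """Simple 1-up/1-down staircase with an idealized, noise-free observer.

    The stimulus level can be read as optotype size in logMAR (larger = easier).
    The observer responds correctly whenever the level is at or above
    `true_threshold`; real observers respond probabilistically.
    Returns the mean level at the reversal points.
    """
    level = start
    last_direction = None
    reversals = []
    while len(reversals) < n_reversals:
        correct = level >= true_threshold
        direction = -1 if correct else +1  # harder after a hit, easier after a miss
        if last_direction is not None and direction != last_direction:
            reversals.append(level)        # the trend of the responses has reversed
        last_direction = direction
        level += direction * step
    return sum(reversals) / len(reversals)

estimate = run_staircase(true_threshold=0.23, start=1.0, step=0.1)
print(round(estimate, 2))
```

The stimulus level depends only on the previous response, so the procedure concentrates trials around the threshold instead of spreading them over predetermined levels as the non-adaptive methods do.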

The procedure can be coupled with a yes/no or an alternative forced-choice (n-AFC) design: in the first case, the observer is asked to report if he can resolve the stimulus and the target probability φ (the probability of perceiving ae) should be equal to or higher than 50%.

In the second case consider the example of a ring that contains the break (target) and one or more uninterrupted rings (null stimuli). The observer is forced to identify the target among the n-alternatives. The intervals can be administered simultaneously (spatial paradigm) or sequentially (temporal paradigm). The target probability will be greater than 0.5 and will depend on n and the adopted psychophysical procedure.
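The dependence of the target probability on the response design can be sketched with a psychometric function that includes a guess rate. A logistic shape is assumed here purely for illustration, and the function name and parameter values are hypothetical.

```python
import math

def psychometric(x, threshold, slope, n_alternatives=None):
    """Probability of a correct (or "yes") response at stimulus level x.

    A logistic function is assumed. For an n-AFC design the lower asymptote
    is the guess rate 1/n; for a yes/no design it is taken as 0.
    """
    guess = 0.0 if n_alternatives is None else 1.0 / n_alternatives
    core = 1.0 / (1.0 + math.exp(-slope * (x - threshold)))
    return guess + (1.0 - guess) * core

# Probability of a correct response exactly at threshold, for two designs.
p_yes_no = psychometric(0.3, threshold=0.3, slope=10.0)
p_4afc = psychometric(0.3, threshold=0.3, slope=10.0, n_alternatives=4)
print(p_yes_no, p_4afc)
```

At the threshold level the yes/no design yields a probability of 0.5, whereas the forced-choice design yields a value midway between the guess rate and 1, which is why the target probability rises as the number of alternatives decreases.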

The reliability and repeatability of visual acuity measurements depend on many factors, namely:

- The luminance of the background;

- The amount of contrast of the signal, i.e., the difference in luminance between signal and background [the term signal, borrowed from the signal detection theory (SDT), identifies a stimulus characterized by an informational content];

- The way stimuli are administered (for instance, alone or flanked). The distance between flanked stimuli is relevant since lateral masking may bias their recognition. Lateral masking (or visual crowding) is the detrimental effect of flanking letters on the recognition of a character when the former are so close as to fall within a spatial interval called critical spacing. The effect of crowding on visual acuity is therefore enhanced by reducing the distance between neighboring letters (3);

- The type of symbols or, in the case of alphanumeric symbols, the font;

- The psychophysical procedure;

- The pupil size of the observer;

- The psychological and physical conditions of the observer;

- The cognitive function or collaboration of the observer.

Landolt’s charts (Landolt’s C)

Landolt’s Cs are annular stimuli with an interval placed in one of the four cardinal positions.

The Landolt’s charts, suitable for estimating visual acuity in illiterate or non-cooperating subjects, present rows of stimuli, each made up of rings of the same size. Starting from the upper portion of the chart, each line differs from the previous one in the smaller size of the rings. The angular size of the interval is proportional to the size of the ring (diameter and thickness of the stroke) so that the ratio between the width of the gap and the size of the ring is constant. The minimum angle of resolution of the gap provides the VA value.

The procedure is compatible with the method of limits associated with a 4-AFC response model, implicit variant. Aleci (1), indeed, distinguished between standard n-AFC, in which the alternatives are presented and the subject is forced to judge the correct one, and implicit n-AFC. In the implicit procedure, the observer is informed about the possible alternatives (in the case of the Landolt’s C the interruption of the ring can be up, down, to the left, or to the right: 4-AFC) and a stimulus (e.g., a ring with the gap on the superior part) is administered at each trial. The subject must choose which one of the mentioned alternatives matches the displayed stimulus. The procedure differs from the classical method of limits since a block of n presentations per signal level (and not a single stimulus) is presented. The observer, informed about the four possible positions of the interval, is forced to report verbally the position of the gap of each symbol displayed along each line (starting from the upper one). The acuity threshold corresponds to the line at which the observer recognizes 62.5% of presentations.

A version of Landolt’s chart with 8 orientations is available. The response method is therefore 8-AFC and the probability to respond correctly by chance is 12.5%. The target probability should therefore be at least (100%+12.5%)/2=56.25%.
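The rule applied above can be written in general form. As a sketch, assuming that the target probability is placed midway between chance performance ($1/n$) and perfect performance in an $n$-AFC design:

$$p_{\text{target}} = \frac{1 + \frac{1}{n}}{2}$$

With $n = 4$ this gives $(1 + 0.25)/2 = 0.625$, and with $n = 8$ it gives $(1 + 0.125)/2 = 0.5625$, in agreement with the values quoted for the 4- and 8-orientation Landolt charts.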

Although the assessment of VA with Landolt’s charts resembles the example reported in the introduction, there is a substantial difference: in the latter the diameter of the ring is constant. It is therefore questionable whether the acuity measured in the two test conditions is the same. It is arguable that if the diameter of the ring remains constant and the only variable is the width of the gap, the estimate operates only on a local scale, while there is no global processing: both instead occur in the clinical practice, in which the global (the whole stimulus) and local configuration (the gap) covary (Figure 2).

The tumbling E charts (“illiterate” E)

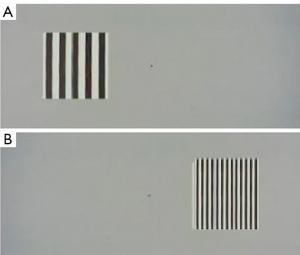

The tumbling E for the assessment of VA in illiterates recruits a perceptual task similar to that of Landolt’s charts. The observer is asked to report the cardinal orientation (above, below, left, or right) of an “E” in which the thickness of the stroke and the width that separates the horizontal bars are 1/5 the size of the symbol. The minimum separable concerns the three horizontal bars: if the three horizontal bars are not resolved, their orientation with the vertical bar cannot be established (Figure 3).

The ability to report a difference between a null stimulus and the target (detection task) is tested: in the Landolt’s charts, the null stimulus corresponds to a ring with no interruption, in the tumbling E it is comparable to a stimulus with a spatial frequency lower than that of the bars (see Figure 1). How much lower, i.e., how much lower the VA is, arguably depends, in the absence of ophthalmological alterations, on the refractive state and therefore on the blur ratio (BR) of the observer (4,5). A blurred configuration like an E is perceived as a series of contiguous superimposed circles of confusion formed by the strokes. Given that the acuity threshold depends on the relative size of these circles, the BR corresponds to the ratio between the diameter of the circle and the size of the retinal image of the E. The larger the circle compared to the retinal image, the higher the BR and the less resolvable the stimulus. Optotypes can be recognized for a BR up to 0.5 (4). Based on these optical criteria, the configuration cannot be resolved if the perceived size of the blurred stroke is more than ½ the size of the whole symbol.
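The blur ratio criterion can be expressed in a few lines. This is only a sketch of the definition given above; the function names and the example sizes are hypothetical, and both sizes must be expressed in the same unit.

```python
def blur_ratio(blur_circle_diameter, retinal_image_size):
    """Ratio between the diameter of the circle of confusion and the retinal image size."""
    return blur_circle_diameter / retinal_image_size

def is_recognizable(blur_circle_diameter, retinal_image_size, limit=0.5):
    """True if the blur ratio does not exceed the limit of 0.5 reported in the text."""
    return blur_ratio(blur_circle_diameter, retinal_image_size) <= limit

# Hypothetical sizes in arbitrary but identical units.
mild_blur = is_recognizable(2.0, 5.0)     # BR = 0.4
strong_blur = is_recognizable(3.0, 5.0)   # BR = 0.6
print(mild_blur, strong_blur)
```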

The identification of a symbol like “E” depends not only on the BR but also on the cutoff object frequencies. Each symbol, in fact, contains a band of spatial frequencies (designated in cycles per letter) that is particularly effective for its identification (6). These object frequencies mediate letter recognition and are indicative of the acuity threshold. In this respect, a symbol contains a broad spectrum of different spatial frequencies and the visual system can split the information into these frequencies, rather than encoding the proper elementary features. These components are processed by selective neuronal channels via a Fourier-like analysis and are integrated into the cortex to reconstruct the whole picture (7). The object frequencies used for letter recognition are within the range of 1–3 cycles per letter (8,9). They depend both on the adopted symbol [e.g., Landolt C or tumbling “E” (10)] and on the angular dimension, so the identification of small letters should rely mainly on low frequencies (i.e., their gross components) while the identification of large symbols on high-frequency components [i.e., their edges (11,12)].

As explained in the introduction, the process is made of two steps. The first step consists of the detection of a stimulation: the observer notices the interruption of the no-stimulation state, that is to say, the presence of a stimulus. In this phase, even if he or she becomes aware of the presence of a signal, he or she is unable to resolve it. The second step is the detection of the details of the signal, in other terms the resolution of the stimulus: the stimulus is recognized as a structured pattern. The first phase corresponds to the detection of the low spatial frequency component of the stimulus (“I see that there is something but I don’t know what it is”); the second phase, of interest in VA assessment, involves the detection of the spatial cutoff frequency that allows the resolution of the configuration. For the sake of precision, as recalled by an anonymous reviewer, if the lowest frequencies are in the falling left-hand part of the contrast sensitivity function, some intermediate frequency, closer to the peak, would be more detectable.

As for Landolt’s chart, measuring VA with the “illiterate” E makes use of a combination of the method of limits and the method of constant stimuli coupled with a 4-AFC response method, implicit variant. The intensity of the signal (its size) is progressively decreased according to the method of limits, but instead of a single target per intensity, blocks of n presentations with the same signal level are displayed in each line, as it occurs in the method of constant stimuli. The threshold corresponds to the line at which the observer correctly recognizes 62.5% of presentations.

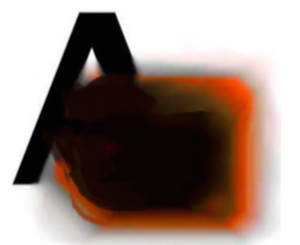

Alphanumeric charts

The most common method for the assessment of VA in literate subjects makes use of charts with alphanumeric symbols. In this case, the task is not limited to the detection and resolution of the target but relies on a subsequent step: the recognition of letters or numbers. Although the recognition task with alphanumeric symbols is expected to be more complex than with Landolt Cs and the tumbling “E”, because it implies the mental association of the presented configuration with a known symbol, Sloan showed that letter acuity is substantially equivalent to Landolt’s C measurement (13). More recently, visual acuity measured with Landolt’s Cs was found to be slightly lower than with letters (14,15): the cognitive component that comes into play with alphanumeric symbols may help identification in the presence of mild perceptual deterioration. In fact, the recognition of a letter can take place by approximation or via a Bayesian-like probabilistic mechanism in blurred conditions or when part of the signal is missing (Figure 4). Evidently, this top-down strategy cannot be employed when VA is measured with Landolt’s C (16).

Visual acuity measured with Landolt’s Cs is lower than acuity estimated with the E charts, too (17-20).

The difference depends on the spatial characteristics of the stimuli. Landolt rings contain a single gap whereas “Es” have two gaps with a lattice-like spatial configuration: in case of signal deterioration, the Landolt’s C appears as a low spatial frequency circular spot so that it is not possible to localize the position of the gap (null stimulus, see Figure 1). On the contrary, in the tumbling E, the horizontal or vertical arrangement of the bars can still be determined with approximation, increasing from 25% to 50% the chance of guessing (21). For this reason, the tumbling Es should be scaled by 15% compared to the Landolt’s Cs to provide similar estimates (22).

Reich and Ekabutr found that the two paradigms differ not only for the threshold but also for the slope of the psychometric function, steeper for the E charts compared to the Landolt’s C (18). The slope, indeed, is the dispersion parameter, so a steeper slope indicates less dispersion of acuity values as a function of the proportion of correct responses: it follows that E charts are more accurate than Landolt’s rings.

When visual acuity is measured with alphanumeric charts, three possible scenarios are expected:

- The detection of the features of the stimulus and the association with the symbols learned during childhood and stored in memory are effective: the stimulus will be recognized. It is worth recalling that some letters, namely those with no edges like “O” or “C”, are more difficult to identify (14,23);

- The detection of the features of the stimulus is defective: the stimulus will be seen blurred, thereby confused with other stimuli;

- The detection of the features of the stimulus is effective but the associative process is defective for central dysfunctions (e.g., agnosia): even if the subject recognizes the symbol, he/she will not be able to name it. In this case, objective methods such as the Oktotype (see below) may be helpful.

The psychophysical procedure commonly used is a mix of the method of limits and constant stimuli, with progressively smaller rows of letters that must be inspected one at a time. Since the subject is forced to name the symbol even when he/she is unable to recognize it, the response model is n-AFC, implicit version, where n is the number of choices. The Latin alphabet has 26 letters: the response model is, therefore, a 26-AFC and the probability of guessing by chance is 3.8%. Therefore, the target probability should be equal to or higher than (100%+3.8%)/2=51.9%. It should be noted that n-AFC, in its implicit version, assumes that the observer knows the possible alternatives, so as to decide which one of them matches the displayed stimulus. Even if the possible subset of choices in the Sloan and Snellen charts is 10 and 9, respectively, the observer is not informed by the operator that the presented stimuli will not be selected from the whole pool of 26 letters of the alphabet. So, the alternatives for the observer remain 26.

For digits [0–9], the response model is 10-AFC: the probability of guessing by chance is 10%, so the target probability should be equal to or higher than (100% + 10%)/2=55%. The threshold is generally taken as the line on which more than 50% of the symbols are recognized.

Standardization criteria according to the Consilium Ophthalmologicum Universale

Visual acuity should be measured in photopic conditions (160 cd·m−2) with high-contrast levels (dark stimuli on a white background). The standard optotype is the Landolt C. The thickness of the ring and the width of the gap are 1/5 of the outer diameter (Figure 5).

VA refers to the smallest optotype identifiable by the patient at a certain distance. It can be expressed as the reciprocal of the angular size of the gap (or of the thickness) of the smallest resolvable optotype at a given distance: this is, in fact, the inverse of the MAR. If the gap of the smallest resolvable Landolt’s C subtends 1 min arc (considered as the minimum angle of resolution in normal subjects), VA will be: 1/MAR =1/1’=1.0. If the smallest resolvable C has a gap of 5 min arc, the corresponding VA is lower: 1/5=0.2. Instead, if the gap of the smallest identifiable C subtends 10 min arc, VA will be 1/10=0.1.

A different notation is linear, and expresses VA as the Snellen fraction: d/D, where d is the distance in meters (or feet) at which the test is administered and D corresponds to the distance in meters (or feet) at which the gap of the ring subtends 1 min arc. If the chart is placed at 6 meters and the gap of the smallest resolvable symbol subtends 1 min arc at that distance, VA is 6/6=1.0. On the other hand, if the gap of the smallest resolvable symbol subtends 1 min arc at a distance of 60 meters, this means that the smallest resolvable C has an interruption wider than the previous one, and, in fact, VA is lower: 6/60=0.1. The Snellen fraction can be reported in decimal notation, for example: 6/6=1.0=10/10; 6/60=0.1=1/10.

This fraction corresponds to the ratio between the maximum distance at which the symbol is recognized by the observer and the (predetermined) distance of the symbol from the observer. So, if the predetermined distance of the symbol is 6 meters and the maximum distance at which the observer can recognize the symbol is 6 meters, VA will be 6/6=1.0. If the maximum distance at which the observer can identify the same symbol is reduced to 3 meters, VA is 3/6=0.5. Likewise, if the maximum distance at which the observer can correctly name the letter of the same size is only 1 meter, VA is 1/6=0.17.
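The relationship between the Snellen fraction, decimal acuity, and the MAR can be sketched as follows. The function names are illustrative and not part of any standard.

```python
def snellen_to_decimal(numerator, denominator):
    """Decimal VA from a Snellen fraction.

    Both distances must be in the same unit (meters or feet).
    """
    return numerator / denominator

def decimal_to_mar(decimal_va):
    """Minimum angle of resolution (min arc) as the reciprocal of decimal VA."""
    return 1.0 / decimal_va

va_normal = snellen_to_decimal(6, 6)    # gap subtends 1 min arc at 6 m
va_low = snellen_to_decimal(6, 60)      # gap subtends 1 min arc at 60 m
va_half = snellen_to_decimal(3, 6)      # symbol recognized only up to 3 m
print(va_normal, va_low, va_half, decimal_to_mar(va_low))
```

The two readings of the fraction described in the text (test distance over reference distance, or maximum recognition distance over predetermined distance) lead to the same arithmetic.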

To summarize:

$$\mathrm{VA} = \frac{A_0}{A} = \frac{y/D}{y/d} = \frac{d}{D}$$

where:

A0: angular size of 1 min arc for a normal vision;

A: minimum angle of resolution in min arc (MAR);

y: size of the gap (or of the stroke) of the ring;

d: test distance;

D: optotype distance at which the gap subtends 1 min arc.

Evidently, the structure of the optotypes is based on the transformation of the angular size into linear dimensions. Since the symbol is five times as tall as its gap, the height of the symbol as a function of VA is obtained from the equation:

$$H = \frac{5 \times 0.3 \times d}{\mathrm{VA}}$$

where:

H: height of the symbol in millimeters;

0.3: a factor that converts min arc to millirad;

d: test distance in meters;

VA: visual acuity (equal to the Snellen fraction).
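The conversion from angular to linear size can be checked numerically. The sketch below assumes that the symbol is five times as tall as its gap (the Landolt C proportion given earlier) and uses the exact conversion of about 0.29 mrad per min arc, which the factor 0.3 listed above approximates; the function and constant names are hypothetical.

```python
import math

ARCMIN_TO_RAD = math.pi / (180 * 60)  # about 0.00029 rad, i.e., roughly 0.3 mrad

def optotype_height_mm(decimal_va, test_distance_m, gap_fraction=1 / 5):
    """Height (mm) of an optotype whose gap subtends the MAR implied by decimal_va.

    Assumes the gap is `gap_fraction` of the symbol height (1/5 by default).
    """
    mar_arcmin = 1.0 / decimal_va
    height_arcmin = mar_arcmin / gap_fraction
    height_rad = height_arcmin * ARCMIN_TO_RAD
    return 2 * test_distance_m * 1000 * math.tan(height_rad / 2)

h_va_1_0 = optotype_height_mm(1.0, 6.0)   # VA 1.0 at 6 m
h_va_0_1 = optotype_height_mm(0.1, 6.0)   # VA 0.1 at 6 m
print(round(h_va_1_0, 2), round(h_va_0_1, 2))
```

Under these assumptions a symbol for VA 1.0 at 6 meters is slightly less than 9 mm tall, and the height scales almost exactly with the reciprocal of VA.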

The step size between consecutive lines must be at least 0.1 logarithmic unit (base 10), corresponding to a variation in the angular size of the symbols by a factor of $\sqrt[10]{10} = 1.2589$. A logarithmic rather than a linear progression of the step size helps keep the variance constant across the whole range of measurements (24).
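The logarithmic progression can be sketched numerically. The function name and the starting size are hypothetical; only the step of 0.1 log unit comes from the text.

```python
STEP_RATIO = 10 ** 0.1  # size ratio between consecutive lines for a 0.1 log unit step

def line_sizes(largest_size, n_lines):
    """Symbol sizes for n_lines consecutive lines, each 0.1 log unit smaller."""
    return [largest_size / STEP_RATIO ** k for k in range(n_lines)]

# Hypothetical chart: top line of 10 min arc gap, 11 lines in total.
sizes = line_sizes(10.0, 11)
print([round(s, 2) for s in sizes])
```

Ten steps of 0.1 log unit reduce the size by exactly one log unit, that is, by a factor of 10, so every line differs from the next by the same proportion regardless of its position on the chart.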

The distance W between the symbols belonging to the same line is a function of their size S so that the ratio W/S is constant. In any case, the distance between symbols of the same line should never be less than their size, to minimize lateral interaction between adjacent letters. For the same reason, the distance between consecutive lines should never be less than the size of the symbols on the upper line.

Standardized criteria require that no fewer than five symbols be presented on the same line, unless their size prevents this for practical reasons; if there are more than five symbols per line, it is recommended to split them into two lines.

The test distance should be at least 4 meters, although this value does not represent infinity from a refractive point of view.

The observer is asked to consider each symbol of the line at a time, in random order, until all the letters or numbers of the line are examined. The test starts from the largest stimuli (uppermost line) and continues until the frequency of errors corresponds to the probability of guessing. Symbols can also be administered individually: in this case, each presentation should last three seconds, and intervals between subsequent presentations should not exceed four seconds. Presenting isolated stimuli leads to higher VAs than flanked stimuli, because the crowding effect is excluded.

ETDRS charts

The ETDRS (Early Treatment Diabetic Retinopathy Study) charts are the most accurate and reliable testing procedures among the alphabetical optotypes (25). ETDRS charts are made up of three alphabetical tables (one for the right eye, one for the left eye, and one for binocular vision). Each table consists of 14 lines, each made of 5 symbols (font: Sloan). The angular size in the lines decreases from top to bottom and the size of the symbols between consecutive lines is reduced by 0.1 logMAR. VA is estimated as the minimum angle of resolution on the logarithmic scale (logMAR), where logMAR =0.0 corresponds to VA =6/6=10/10 and logMAR =1.0 corresponds to 6/60=1/10. Alternatively, logMAR can be quantified as Visual Acuity Rating (26), according to the formula: VAR =100-50 logMAR. Therefore, logMAR =0.0 corresponds to VAR =100, while logMAR =1.0 corresponds to VAR =50.
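The conversions among decimal VA, logMAR, and VAR can be sketched as follows. The function names are illustrative; the VAR formula is the one quoted above.

```python
import math

def decimal_to_logmar(decimal_va):
    """logMAR is the base-10 logarithm of the MAR, i.e., of 1/decimal VA."""
    return math.log10(1.0 / decimal_va)

def logmar_to_var(logmar):
    """Visual Acuity Rating according to VAR = 100 - 50 logMAR."""
    return 100 - 50 * logmar

logmar_6_6 = decimal_to_logmar(1.0)    # 6/6
logmar_6_60 = decimal_to_logmar(0.1)   # 6/60
print(logmar_6_6, logmar_6_60, logmar_to_var(logmar_6_6), logmar_to_var(logmar_6_60))
```

Since each line spans 0.1 logMAR and contains five letters, one line corresponds to 5 VAR points, that is, one point per letter.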

It is noteworthy that the distance between the symbols decreases line after line so that the effect of the lateral masking remains the same. It is required to read the symbols starting from the first line, urging the subject to guess if in doubt. The examination continues until it is evident that the observer is no longer able to recognize the letters. The operator, who marked the recognized letters on a card identical to the ETDRS chart, computes the score. What is the best method of scoring is debated. After examining a cohort of normal subjects and patients with maculopathy, Vanden Bosch and Wall (27) compared three procedures:

- Letter-by-letter or ETDRS method (just described): VA is quantified as a function of the total number of recognized letters. LogMAR is computed by adding 0.02 for each incorrect response to the value corresponding to the line at which the observer identified at least 1 character. For example, if the subject recognizes 4 letters out of 5 in line 0.8 LogMAR, he or she will score 0.8+0.02=0.82.

- Line assignment method: VA corresponds to the line at which 3 symbols out of 5 have been correctly recognized and is expressed as the corresponding logMAR value (or Snellen fraction).

- Probit analysis: VA is estimated as the threshold, in terms of MAR, at which 50% of the letters with a given MAR are recognized. This threshold is obtained via probit analysis. Probit analysis is a regression model adopted to convert binary responses as a function of the signal intensity into units of probability, in order to obtain a linear regression between these units and the signal level.
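The first two scoring rules listed above can be sketched in code. This is one possible reading of the descriptions, not the authors' implementation: in the letter-by-letter rule, misses are counted on all lines down to and including the last line with at least one correct response, which is an interpretive assumption. The function names and the example responses are hypothetical.

```python
def letter_by_letter_logmar(results, letter_value=0.02):
    """results: list of (line_logmar, n_correct, n_presented) tuples.

    Takes the smallest line with at least one correct response and adds
    0.02 for every letter missed on that line and on the larger lines.
    """
    read_lines = [line for (line, correct, _) in results if correct >= 1]
    if not read_lines:
        return None
    last_line = min(read_lines)
    misses = sum(shown - correct
                 for (line, correct, shown) in results if line >= last_line)
    return last_line + letter_value * misses

def line_assignment_logmar(results, criterion=3):
    """Smallest line on which at least `criterion` symbols were recognized."""
    passed = [line for (line, correct, _) in results if correct >= criterion]
    return min(passed) if passed else None

# Hypothetical responses: 4 of 5 letters read on the 0.8 logMAR line, none below.
responses = [(1.0, 5, 5), (0.9, 5, 5), (0.8, 4, 5), (0.7, 0, 5)]
score_letters = letter_by_letter_logmar(responses)
score_line = line_assignment_logmar(responses)
print(round(score_letters, 2), score_line)
```

With these responses the letter-by-letter score reproduces the worked example in the text, while the line assignment method returns the logMAR value of the line itself, which illustrates why the latter is limited to coarser steps.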

Test-retest variability (measured as the mean standard deviation computed on six subsequent measurements of VA in the observers) for the line assignment method is found to be higher than for the other procedures (SD =0.049 vs. 0.035–0.038 logMAR) (27).

To speed up the examination without reducing sensitivity, other techniques (that require dedicated equipment) have been devised. We recall the ETDRS-Fast method and the Freiburg Visual Acuity Test, administered on a PC screen.

The ETDRS-Fast method

ETDRS-Fast (28) is an adaptive method that makes use of the ETDRS charts. Instead of reading sequentially all the letters from the first to the last line, only one letter per line is presented until, proceeding down the chart, a letter is missed. At this point, the strategy is the same as the conventional ETDRS method: the observer is asked to read the whole line preceding the one with the mistake. If the line is read with no more than one error, the next line (five letters) is administered according to the standard ETDRS method. If more than one mistake is made along the line, the previous line is proposed. The stopping rule conforms to the standard procedure. The difference between the first phase (presentation of one letter per line) and the second phase (presentation of all five letters of the line) lies in the criterion for passing to the next level: in the first phase, the passage to the next level is determined by the correct response to a single stimulus presented at the previous level [as in the up/down staircase method (29)]. In the second phase, the passage to the next level occurs if no more than one mistake is made in the total number of letters per line. The second phase, therefore, follows a rule that resembles Wald’s sequential probability ratio test (30) adopted in the PEST procedure (31).

This method is faster than the standard ETDRS procedure: it requires 30% less time to complete the examination, and the final estimate is comparable. Since it approaches the threshold more quickly, ETDRS-Fast is less demanding in terms of attention/concentration, with lower variability of the results in subsequent tests (better test-retest reliability) compared to the conventional procedure.

The Freiburg Visual Acuity and Contrast Test (FrACT)

FrACT (32,33) is an automated method for the self-assessment of VA. It makes use of Landolt Cs of known angular size presented on a monitor with eight possible orientations of the gap. The observer is forced to press the key corresponding to the correct orientation on a response box. FrACT makes use of the best PEST procedure for positioning the stimuli (34). It is therefore an 8-AFC response model, implicit variant, combined with a parametric adaptive procedure that assumes a psychometric function with a constant slope on a logarithmic acuity scale. The test ends after a predetermined number of trials [more than 18 trials per test run (35)]. Advanced computer graphics is employed to reproduce Landolt’s optotypes over the full range of acuity. This way, the technique can measure VA between 5/80 (0.06) and 5/1.4 (3.6), at a distance of 5 meters.
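The principle of a best-PEST placement rule with an 8-AFC response model can be sketched as follows. After each trial, the threshold that maximizes the likelihood of all responses collected so far is computed, and the next optotype would be presented at that level. The logistic form of the psychometric function, the slope value, and the grid of candidate thresholds are assumptions chosen for illustration; they are not taken from the FrACT implementation.

```python
import math

GUESS_RATE = 1 / 8  # eight possible gap orientations


def p_correct(log_mar, threshold, slope=10.0):
    """Assumed psychometric function: logistic in logMAR with a fixed slope.

    Larger logMAR means a larger optotype, hence a higher probability correct.
    """
    logistic = 1 / (1 + math.exp(-slope * (log_mar - threshold)))
    return GUESS_RATE + (1 - GUESS_RATE) * logistic


def best_pest_estimate(trials, candidates):
    """Maximum-likelihood threshold among `candidates`.

    trials : list of (log_mar, correct) pairs collected so far
    """
    def log_likelihood(threshold):
        total = 0.0
        for log_mar, correct in trials:
            p = p_correct(log_mar, threshold)
            total += math.log(p if correct else 1 - p)
        return total

    return max(candidates, key=log_likelihood)


# Hypothetical run: correct at 0.4 and 0.2 logMAR, wrong at 0.0 and -0.2 logMAR.
grid = [-0.3 + 0.05 * i for i in range(21)]
next_level = best_pest_estimate(
    [(0.4, True), (0.2, True), (0.0, False), (-0.2, False)], grid
)
```

Because the slope is held constant, only the position of the function along the logMAR axis has to be estimated, which is what allows a reliable estimate after a limited number of trials.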

FrACT has proved to be a reliable and reproducible method, substantially equivalent to the classical Landolt charts, but capable of measuring visual acuity on a continuous scale and therefore not limited to discrete acuity steps, as occurs in the conventional procedures (36).

Preferential looking

The principle of preferential looking is that toddlers are prone to look at a structured configuration rather than at a homogeneous stimulus with identical mean luminance (37,38).

The Teller procedure (forced-choice preferential looking technique: FPL)

Teller’s preferential looking (39) was developed to assess VA in pre-verbal children. The toddler is placed in front of a target (a grating with a given spatial frequency and fixed contrast) and a null stimulus (a uniform equiluminant surface) presented on a panel. The technique is based on the operator’s judgment of the direction of the gaze of the toddler. VA is derived from the highest spatial frequency of the grating that evokes a preferential fixation (39) (Figure 6). The Teller procedure measures a grating (resolution) acuity because the observer’s behavioral response is obtained when he or she can resolve the bars of the serial pattern (39) (Figure 6).

The child is held in the parent’s arms, with the forehead 36 cm from the panel. A screen placed above the child’s head, at the level of the parent’s head, prevents the parent from seeing the stimulus. The operator, placed behind the panel and able to see only the toddler’s face through a hole, has to establish the position (right or left) of the target by estimating the direction (right/left) of the infant’s gaze.
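Since the grating is viewed from a fixed distance, its spatial frequency on the panel can be converted into cycles per degree of visual angle, and from there into logMAR. The sketch below assumes the conventional equivalence between 30 cycles/degree and a MAR of 1 min arc (one bar of the grating subtending 1 min arc); the function names are hypothetical.

```python
import math


def cycles_per_degree(cycles_per_cm, distance_cm=36.0):
    """Convert a grating's spatial frequency on the panel to cycles per degree."""
    # Width on the panel subtended by 1 degree of visual angle at this distance.
    cm_per_degree = 2 * distance_cm * math.tan(math.radians(0.5))
    return cycles_per_cm * cm_per_degree


def grating_to_logmar(cpd):
    """Convert grating acuity (cycles/degree) to logMAR.

    Assumed convention: one bar is half a cycle, so MAR (min arc) = 30 / cpd.
    """
    return math.log10(30.0 / cpd)


# At 36 cm, 1 degree spans about 0.63 cm, so 1 cycle/cm is about 0.63 cycles/degree.
cpd = cycles_per_degree(1.0)
```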

The number of tested spatial frequencies and the number of trials at each spatial frequency are predetermined. Background luminance is photopic.

At the end of the examination, the proportion of responses (the estimated direction, right or left) that corresponds to the presentation (right/left) of the target is computed (39).

The psychophysical procedure is similar to the method of constant stimuli, response model 2AFC. The detection threshold of the child is interpreted by the operator. The independent variable is the spatial frequency of the gratings, whose cut-off value corresponds to the VA of the child.

In a 2AFC response model, chance performance is 50%, so the target probability is conventionally set at 75%, midway between chance and perfect performance. Therefore, the VA threshold corresponds to the spatial frequency at which the operator correctly indicates the direction of the toddler’s gaze 75% of the time.
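Once the proportion of correct judgments has been computed for each spatial frequency, the 75% point can be located between the two tested frequencies that bracket it. The following sketch uses simple linear interpolation; the choice of interpolation (rather than fitting a psychometric function), the function name, and the data are assumptions made for illustration.

```python
def threshold_by_interpolation(spatial_freqs, prop_correct, target=0.75):
    """Spatial frequency at which the proportion correct crosses `target`.

    spatial_freqs : tested frequencies in ascending order (cycles/degree)
    prop_correct  : proportion of correct operator judgments at each frequency
    Returns None if the target is never crossed.
    """
    points = list(zip(spatial_freqs, prop_correct))
    for (f0, p0), (f1, p1) in zip(points, points[1:]):
        if p0 >= target > p1:
            # Linear interpolation between the two bracketing frequencies.
            return f0 + (p0 - target) * (f1 - f0) / (p0 - p1)
    return None


# Hypothetical results of a constant-stimuli session.
acuity = threshold_by_interpolation(
    [1, 2, 4, 8, 16], [1.00, 0.95, 0.85, 0.65, 0.50]
)
```

With these hypothetical data the proportion correct falls from 0.85 at 4 cycles/degree to 0.65 at 8 cycles/degree, so the interpolated 75% threshold lies halfway between them, at about 6 cycles/degree.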

Even if the reliability of the Teller procedure in children is not well established, it improves by increasing the number of trials, so that at least 20–25 trials for each spatial frequency are required to provide a reliable estimation of acuity (39). According to some studies, Teller acuity is higher than recognition acuity, especially in amblyopic children (40,41), whereas for other investigations it tends to underestimate recognition acuity (42,43). In turn, Moseley and colleagues found good agreement (44). Discrepancies are presumably due to the different age of the children tested by the authors (VA tends to improve as the child grows up) and to the different target probability assumed for the threshold (42). In addition, preferential looking measures a near visual acuity, which can be higher compared to far acuity, especially in the case of amblyopia (40,41).

The FPL procedure usually administers vertically oriented gratings (see Figure 6). Since optical conditions, namely astigmatism, may affect the visual threshold along different orientations, orientation-dependent blur is expected (45). In fact, better performance with horizontally or vertically oriented gratings in astigmatic children has been found (depending on the axis of their refractive error), while no effect was reported in a non-astigmatic cohort (46). In both cases (astigmatic and non-astigmatic), there is no preference for oblique orientations (46), in line with the fact that visual sensitivity to oblique orientations is lower, irrespective of optical factors (47). This physiological phenomenon is called the oblique effect and refers to the relative reduction in visual sensitivity for oblique contours and orientations compared to the cardinal axes. The oblique effect is not due to optical factors but depends on neural mechanisms in the low-level visual cortical areas: horizontally or vertically arranged objects elicit a stronger cortical activation than obliquely oriented patterns. It follows that oblique gratings have a higher detection threshold than gratings oriented along the horizontal or the vertical (47).

The FPL diagnostic stripes procedure

For a faster VA examination, Dobson proposed a variant of the FPL that could be termed the diagnostic stripes FPL procedure (48). In this version, the null stimulus consists of a grating with a spatial frequency so low as to elicit gaze orientation even in the case of very poor acuity. The signal is a grating of higher spatial frequency, chosen based on the age of the toddler. According to empirical evidence, this age-dependent diagnostic frequency should evoke a preferential gaze orientation in 97% of children of that age. The authors point out that the diagnostic frequency does not correspond to the average acuity threshold at that age, but is lower: it is expected, in fact, to elicit gaze orientation with a probability higher than the target probability for a 2AFC response model (97% instead of 75%). In sum, the diagnostic frequency is computed on the average performance of the population for a given age and not on the estimate of the threshold of each observer belonging to that population. In other words, the procedure of Dobson is a supra-threshold screening test aimed at ascertaining that the subject under examination performs as expected, that is to say, like the average of his/her peers.

The technique of Fantz

In the late fifties, Fantz (38,49) devised a preferential looking procedure that differed from the technique of Teller: the operator behind the panel was required to estimate how long the toddler fixated the target, and to compare this interval with the duration of the fixation directed to the null stimulus. Fixation was assessed by observing the duration of the corneal reflection of the two stimuli. The child was shown a grating with one of four different spatial frequencies, first on the right and then on the left, presented together with a null stimulus. The limited number of trials for each subject (two trials per frequency × four frequencies = 8 trials vs. 20 trials of the Teller technique) was justified by the fact that the goal of the study was not to measure VA in a patient but simply to collect a large amount of data to assess the average VA of toddlers.

Objective acuity: the VEP response

The subjective techniques, being psychophysical, require the active collaboration of the observer. Thus, in the case of toddlers or children with mental retardation or neurological impairment (as well as malingerers), subjective acuity measured with conventional optotypes has poor reliability or is not usable (50). Even if the estimate provided by preferential looking is generally consistent with ocular abnormalities (51) and shows adequate test-retest reliability in normal toddlers (52,53), the variability of the estimate increases in the presence of neurological/intellectual disabilities due to the difficulty in interpreting the observer’s behavioral response and/or to the presence of oculomotor dysfunctions (54,55).

Within this frame, alongside the classical psychophysical approach, inner psychophysics relies on the assumption that a sensation relative to a stimulation generates an underlying neurobiological activation of the sensory apparatus (1). In this respect, objective acuity can be estimated via the electrical potentials recorded from the visual cortex in response to a detailed stimulus [visually evoked potentials (VEPs)]. The structured target (e.g., a grating or a checkerboard) results in the excitation of the cortical cells, whose activity is recorded by the examiner as a change in the electrical activity through electrodes placed on the scalp of the observer. Since the response is not evoked if the target is not resolved, this technique relies on a resolution task (grating acuity).

VEP is successfully employed to test non-collaborative observers and severely handicapped patients (56). However, the visual potentials evoked by the structured stimulation can be biased by neurological deficits that involve the visual domain (57). In addition, complex and expensive instrumentation and expert examiners are required, so more rapid and user-friendly procedures for assessing objective acuity are desirable.

Objective acuity: the optokinetic nystagmus and the Oktotype

The optokinetic nystagmus is a physiological oculomotor reflex due to a serial stimulation (repetitive stimulus) in the visual field. It consists of a slow pursuit phase followed by fast re-fixation movements. For the optokinetic response to be evoked, the serial pattern must be resolved. In this case, evidently, active collaboration and verbal response by the examinee are not required. So, the optokinetic response can be exploited to assess VA in preverbal children or non-cooperative subjects. Although this is not a psychophysical test but an objective examination, for the sake of completeness it will be briefly discussed in this paper.

The procedure is based on the observation of the optokinetic response evoked by a striped pattern with known spatial frequency in constant translational movement, generally horizontal. Data are obtained by direct inspection of the proband’s gaze (58-61) or with the aid of electro-oculographic recordings (62). VA is estimated by progressively increasing the spatial frequency of the stripes (i.e., by reducing their width). Below a certain width, in fact, the stripes are no longer resolved: the serial pattern is perceived as uniform, which prevents the optokinetic movement. This way, the highest spatial frequency that evokes the optokinetic response can be used to compute the visual acuity of the subject. Visual acuity for a given age can be computed from the highest spatial frequency (i.e., the narrowest stripes) at which nystagmus persists in at least 75% of the subjects.

Two procedures based on the optokinetic nystagmus have been proposed to assess VA:

- The induction method (just described): serial stimuli are displayed, consisting of vertical dark and white shifting stripes; the spatial frequency of the grating is progressively increased until the nystagmus is no longer detected.

- The suppression method: a moving supra-threshold grating, i.e., one with a spatial frequency low enough to evoke the optokinetic response, is presented; a suppressive stimulus (a spot) is superimposed on the grating. The size of the spot is progressively increased until the ocular response disappears. The estimate of objective VA is a function of the minimum size of the spot that suppresses the reflex. The assumption upon which the suppression method relies is that, above a certain angular size, the perception of the suppressive stimulus exerts an inhibitory effect on the optokinetic reflex (63).

The Oktotype (60,61) is a new technique developed by Aleci to measure objective VA. It makes use of a serial stimulation made of symbols organized in a linear periodic scheme that moves horizontally to evoke the optokinetic response. The linear periodic pattern is quantified in terms of spatial frequency [symbols/unit of space (Figure 7)].

Eye movements are detected by a camera mounted on a trial frame. Ascending (increasing spatial frequency) and descending (decreasing spatial frequency) series of stimuli are proposed to identify the maximum spatial frequency capable of evoking the optokinetic response (Minimum Angular size Evoking the optokinetic Reflex: MAER), according to the method of limits.
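The method of limits can be sketched as follows: in each series, the point at which the response changes (from present to absent in an ascending series, from absent to present in a descending one) is located, and the transition points are averaged. This is the textbook form of the method, given here as an assumption; the scoring actually adopted for the Oktotype may differ, and the function names, units, and data are hypothetical.

```python
def transition_point(series):
    """Midpoint between the two consecutive levels at which the response changes.

    series : list of (spatial_frequency, response_evoked) in presentation order
    Returns None if the response never changes within the series.
    """
    for (f0, r0), (f1, r1) in zip(series, series[1:]):
        if r0 != r1:
            return (f0 + f1) / 2
    return None


def method_of_limits(series_list):
    """Average the transition points of ascending and descending series."""
    points = [transition_point(s) for s in series_list]
    points = [p for p in points if p is not None]
    return sum(points) / len(points) if points else None


# Hypothetical recordings (spatial frequency in arbitrary units).
ascending = [(2, True), (4, True), (6, True), (8, False)]
descending = [(12, False), (10, False), (8, True)]
estimate = method_of_limits([ascending, descending])
```

Here the ascending series changes between 6 and 8 and the descending series between 10 and 8, so the two transition points (7 and 9) average to 8.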

Based on preliminary results, the Oktotype seems a promising tool to estimate visual acuity in non-collaborative subjects (60,61).

Dynamic visual acuity

Since the aforementioned tasks aim at measuring the resolution ability of the visual system for stationary details, the proper term to be used should be static (or spatial) VA assessment. Yet discriminating moving details is a main requisite in daily life. A substantial difference between static and dynamic visual acuity is that the contribution of the peripheral retina to dynamic vision is much greater than for the discrimination of static details: static details, in fact, mostly recruit the central part of the retina (the macular region). As far as we know, dynamic vision is not assessed in routine eye-care practice, and yet it deserves to be mentioned for the sake of completeness.

The term dynamic VA refers to the ability to distinguish details when there is relative movement between the object and the observer, for example, a moving optotype or a static chart read during head movement (64). Dynamic acuity is an indicator of the vestibulo-ocular response and the quality of motion perception (65). For this reason, it can be used to assess visual function in athletes (sport-vision) or in the otolaryngologic field (65).

Conclusions

Visual acuity is a fundamental semeiologic parameter in the eye care practice, and its assessment is the most adopted psychophysical procedure to investigate the functional state of the eye. In fact, the main reason a person undergoes an ophthalmological examination is the subjective reduction of the ability to see. Visual acuity can be estimated subjectively or, less frequently, with objective methods. In the first case, measurement takes place through adaptive or non-adaptive psychophysical procedures and the estimated acuity threshold corresponds to the signal value that provides a predefined probability of correct responses. The evaluation is not simple because the perceptual threshold fluctuates, depending on physiological changes in sensitivity, psychological and physical conditions, as well as environmental factors. These parameters require consideration and, as far as possible, should be controlled.

In clinical practice, visual acuity refers to the ability to read letters, numbers, or other symbols on a chart: therefore, it is a recognition task. The ETDRS charts with logarithmic step size are considered the gold standard among the alphabetic optotypes for clinical and research purposes.

The Landolt C (a reference by international standards for estimating visual acuity) or the tumbling E optotypes are suitable to test illiterate or pre-verbal subjects. The observer’s response model is in both cases an alternative forced-choice procedure.

Objective methods rely mainly on preferential looking, VEP recording, and the optokinetic response: these procedures, including the Oktotype, can be suitable in the case of non-collaborative patients and for legal aspects.

As shown, the theoretical and mathematical principles that characterize psychophysics are a material point to be considered when testing visual acuity. Nevertheless, the current clinical practice tends to be based on heuristic criteria. Computer-aided strategies strictly relying on psychophysical principles will help the examiner obtain more accurate, repeatable, and efficient acuity measurements. Likewise, faster objective approaches continue to evolve, becoming more and more reliable in predicting the subjective acuity threshold.

Acknowledgments

Funding: None.

Footnote

Peer Review File: Available at https://aes.amegroups.com/article/view/10.21037/aes-22-25/prf

Conflicts of Interest: Both authors have completed the ICMJE uniform disclosure form (available at https://aes.amegroups.com/article/view/10.21037/aes-22-25/coif). CA reports royalties paid for the development of the Tetra Analyzer, royalties due for 4 books and paid lectures on dyslexia and perception in the past 36 months. None of them related to the content of the current manuscript. He certifies that the prototype “oktotype”, cited at the end of the manuscript, is not a trademark and it is not commercially available. He declares no patents planned, issued or pending for the TETRA and for the oktotype. The other author has no conflicts of interest to declare.

Ethical Statement:

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Aleci C. Measuring the Soul-Psychophysics for non-Psychophysicists, EDP Sciences, Les Ulis, France, 2020.

- Green DM, Swets JA. Signal detection theory and psychophysics. Oxford: John Wiley, 1966.

- Bouma H. Interaction effects in parafoveal letter recognition. Nature 1970;226:177-8. [Crossref] [PubMed]

- Swaine W. The relation of visual acuity and accommodation to ametropia. Transactions of the Optical Society 1925;27:9-27. [Crossref]

- Smith G. Relation between spherical refractive error and visual acuity. Optom Vis Sci 1991;68:591-8. [Crossref] [PubMed]

- Parish DH, Sperling G. Object spatial frequencies, retinal spatial frequencies, noise, and the efficiency of letter discrimination. Vision Res 1991;31:1399-415. [Crossref] [PubMed]

- Campbell FW, Robson JG. Application of Fourier analysis to the visibility of gratings. J Physiol 1968;197:551-66. [Crossref] [PubMed]

- Regan D, Raymond J, Ginsburg AP, et al. Contrast sensitivity, visual acuity and the discrimination of Snellen letters in multiple sclerosis. Brain 1981;104:333-50. [Crossref] [PubMed]

- Legge GE, Pelli DG, Rubin GS, et al. Psychophysics of reading--I. Normal vision. Vision Res 1985;25:239-52. [Crossref] [PubMed]

- Bondarko VM, Danilova MV. What spatial frequency do we use to detect the orientation of a Landolt C? Vision Res 1997;37:2153-6. [Crossref] [PubMed]

- Majaj NJ, Pelli DG, Kurshan P, et al. The role of spatial frequency channels in letter identification. Vision Res 2002;42:1165-84. [Crossref] [PubMed]

- Alexander KR, McAnany JJ. Determinants of contrast sensitivity for the tumbling E and Landolt C. Optom Vis Sci 2010;87:28-36. [Crossref] [PubMed]

- Sloan LL, Rowland WM, Altman A. Comparison of three types of test target for the measurement of visual acuity. Q Rev Ophthalmol Otorhinolaryngol 1952;8:4-16.

- Raasch TW, Bailey IL, Bullimore MA. Repeatability of visual acuity measurement. Optom Vis Sci 1998;75:342-8. [Crossref] [PubMed]

- Sheedy JE, Bailey IL, Raasch TW. Visual acuity and chart luminance. Am J Optom Physiol Opt 1984;61:595-600. [Crossref] [PubMed]

- Wittich W, Overbury O, Kapusta MA, et al. Differences between recognition and resolution acuity in patients undergoing macular hole surgery. Invest Ophthalmol Vis Sci 2006;47:3690-4. [Crossref] [PubMed]

- Bennett AG. Ophthalmic test types. A review of previous work and discussions on some controversial questions. Br J Physiol Opt 1965;22:238-71. [PubMed]

- Reich LN, Ekabutr M. The effects of optical defocus on the legibility of the Tumbling-E and Landolt-C. Optom Vis Sci 2002;79:389-93. [Crossref] [PubMed]

- Becker R, Gräf M. Landolt C and Snellen E acuity: differences in strabismus amblyopia? Klin Monbl Augenheilkd 2006;223:24-8. [Crossref] [PubMed]

- Plainis S, Kontadakis G, Feloni E, et al. Comparison of visual acuity charts in young adults and patients with diabetic retinopathy. Optom Vis Sci 2013;90:174-8. [Crossref] [PubMed]

- Rosenfield M, Logan N. Optometry: science, techniques and clinical management. Butterworth Heinemann Elsevier, 1988.

- Grimm W, Rassow B, Wesemann W, et al. Correlation of optotypes with the Landolt ring-a fresh look at the comparability of optotypes. Optom Vis Sci 1994;71:6-13. [Crossref] [PubMed]

- Ferris FL 3rd, Freidlin V, Kassoff A, et al. Relative letter and position difficulty on visual acuity charts from the Early Treatment Diabetic Retinopathy Study. Am J Ophthalmol 1993;116:735-40. [Crossref] [PubMed]

- Westheimer G. Scaling of visual acuity measurements. Arch Ophthalmol 1979;97:327-30. [Crossref] [PubMed]

- Ferris FL 3rd, Kassoff A, Bresnick GH, et al. New visual acuity charts for clinical research. Am J Ophthalmol 1982;94:91-6. [Crossref] [PubMed]

- Bailey IL. Measurement of visual acuity-towards standardization. In Vision Science Symposium, A Tribute to Gordon G. Heath. Bloomington: Indiana University, 1988:217-30.

- Vanden Bosch ME, Wall M. Visual acuity scored by the letter-by-letter or probit methods has lower retest variability than the line assignment method. Eye (Lond) 1997;11:411-7. [Crossref] [PubMed]

- Camparini M, Cassinari P, Ferrigno L, et al. ETDRS-fast: implementing psychophysical adaptive methods to standardized visual acuity measurement with ETDRS charts. Invest Ophthalmol Vis Sci 2001;42:1226-31. [PubMed]

- Dixon WJ, Mood AM. A method for obtaining and analyzing sensitivity data. J Am Stat Assoc 1948;43:109-26. [Crossref]

- Wald A. Sequential Analysis. New York: John Wiley & Sons Eds, 1947.

- Taylor MM, Creelman CD. PEST: Efficient estimates on probability functions. J Acoust Soc Am 1967;41:782-7. [Crossref]

- Bach M. The Freiburg Vision Test. Automated determination of visual acuity. Ophthalmologe 1995;92:174-8. [PubMed]

- Bach M. The Freiburg Visual acuity test-automatic measurement of visual acuity. Optom Vis Sci 1996;73:49-53. [Crossref] [PubMed]

- Pentland A. Maximum-likelihood estimation: The best PEST. Percept Psychophys 1980;28:377-9. [Crossref] [PubMed]

- Bach M. The Freiburg Visual Acuity Test-variability unchanged by post-hoc re-analysis. Graefes Arch Clin Exp Ophthalmol 2007;245:965-71. [Crossref] [PubMed]

- Wesemann W. Visual acuity measured via the Freiburg visual acuity test (FVT), Bailey Lovie chart and Landolt Ring chart. Klin Monbl Augenheilkd 2002;219:660-7. [Crossref] [PubMed]

- Berlyne DE. The influence of the albedo and complexity of stimuli on visual fixation in the human infant. Br J Psychol 1958;49:315-8. [Crossref] [PubMed]

- Fantz RL. Pattern vision in young infants. Psychol Rec 1958;8:43-7. [Crossref]

- Teller DY. The forced-choice preferential looking procedure: A psychophysical technique for use with human infants. Infant Behav Dev 1979;2:135-53. [Crossref]

- Kushner BJ, Lucchese NJ, Morton GV. Grating visual acuity with Teller cards compared with Snellen visual acuity in literate patients. Arch Ophthalmol 1995;113:485-93. [Crossref] [PubMed]

- Paik HJ, Shin MK. The Clinical Interpretation of Teller Acuity Card Test in the Diagnosis of Amblyopia. J Korean Ophthalmol Soc 2001;47:1030-6.

- Joo HJ, Yi HC, Choi DG. Clinical usefulness of the teller acuity cards test in preliterate children and its correlation with optotype test: A retrospective study. PLoS One 2020;15:e0235290. [Crossref] [PubMed]

- Hartmann EE, Ellis GS Jr, Morgan KS, et al. The acuity card procedure: longitudinal assessments. J Pediatr Ophthalmol Strabismus 1990;27:178-84. [Crossref] [PubMed]

- Moseley MJ, Fielder AR, Thompson JR, et al. Grating and recognition acuities of young amblyopes. Br J Ophthalmol 1988;72:50-4. [Crossref] [PubMed]

- Murray IJ, Parry NR, Ritchie SI, et al. Importance of grating orientation when monitoring contrast sensitivity before and after refractive surgery. J Cataract Refract Surg 2008;34:551-6. [Crossref] [PubMed]

- Atkinson J, French J. Astigmatism and orientation preference in human infants. Vision Res 1979;19:1315-7. [Crossref] [PubMed]

- Appelle S. Perception and discrimination as a function of stimulus orientation: the "oblique effect" in man and animals. Psychol Bull 1972;78:266-78. [Crossref] [PubMed]

- Dobson V, Teller DY, Lee CP, et al. A behavioral method for efficient screening of visual acuity in young infants: I. preliminary laboratory development. Invest Ophthalmol Vis Sci 1978;17:1142-50. [PubMed]

- Fantz RL, Ordy JM, Udelf MS. Maturation of pattern vision in infants during the first six months. J Comp Physiol Psychol 1962;55:907-17. [Crossref]

- Lawson LJ Jr, Schoofs G. A technique for visual appraisal of mentally retarded children. Am J Ophthalmol 1971;72:622-4. [Crossref] [PubMed]

- Lennerstrand G, Axelsson A, Andersson G. Visual acuity testing with preferential looking in mental retardation. Acta Ophthalmol (Copenh) 1983;61:624-33. [Crossref] [PubMed]

- Jacobsen K, Grøttland H, Flaten MA. Assessment of visual acuity in relation to central nervous system activation in children with mental retardation. Am J Ment Retard 2001;106:145-50. [Crossref] [PubMed]

- Getz LM, Dobson V, Luna B, et al. Interobserver reliability of the Teller Acuity Card procedure in pediatric patients. Invest Ophthalmol Vis Sci 1996;37:180-7. [PubMed]

- Hertz BG, Rosenberg J. Effect of mental retardation and motor disability on testing with visual acuity cards. Dev Med Child Neurol 1992;34:115-22. [Crossref] [PubMed]

- Black P. Visual disorders associated with cerebral palsy. Br J Ophthalmol 1982;66:46-52. [Crossref] [PubMed]

- Lennerstrand G, Axelsson A, Andersson G. Visual assessment with preferential looking techniques in mentally retarded children. Acta Ophthalmol (Copenh) 1983;61:183-5. [Crossref] [PubMed]

- Odom JV, Green M. Visually evoked potential (VEP) acuity: testability in a clinical pediatric population. Acta Ophthalmol (Copenh) 1984;62:993-8. [Crossref] [PubMed]

- Gorman JJ, Cogan DG, Gellis SS. An apparatus for grading the visual acuity of infants on the basis of optokinetic nystagmus. Pediatrics 1957;19:1088-92. [Crossref] [PubMed]

- Gorman JJ, Cogan DG, Gellis SS. A device for testing visual acuity in infants. Sight-Saving Rev 1959;29:80-4.

- Aleci C, Scaparrotti M, Fulgori S, et al. A novel and cheap method to correlate subjective and objective visual acuity by using the optokinetic response. Int Ophthalmol 2018;38:2101-15. [Crossref] [PubMed]

- Aleci C, Cossu G, Belcastro E, et al. The optokinetic response is effective to assess objective visual acuity in patients with cataract and age-related macular degeneration. Int Ophthalmol 2019;39:1783-92. [Crossref] [PubMed]

- Dayton GO Jr, Jones MH, Aiu P, et al. Developmental study of coordinated eye movements in the human infant. I. Visual acuity in the newborn human: a study based on induced optokinetic nystagmus recorded by electro-oculography. Arch Ophthalmol 1964;71:865-70. [Crossref] [PubMed]

- Hyon JY, Yeo HE, Seo JM, et al. Objective measurement of distance visual acuity determined by computerized optokinetic nystagmus test. Invest Ophthalmol Vis Sci 2010;51:752-7. [Crossref] [PubMed]

- Palidis DJ, Wyder-Hodge PA, Fooken J, et al. Distinct eye movement patterns enhance dynamic visual acuity. PLoS One 2017;12:e0172061. [Crossref] [PubMed]

- Wu TY, Wang YX, Li XM. Applications of dynamic visual acuity test in clinical ophthalmology. Int J Ophthalmol 2021;14:1771-8. [Crossref] [PubMed]

Cite this article as: Aleci C, Rosa C. Psychophysics in the ophthalmological practice—I. visual acuity. Ann Eye Sci 2022;7:37.